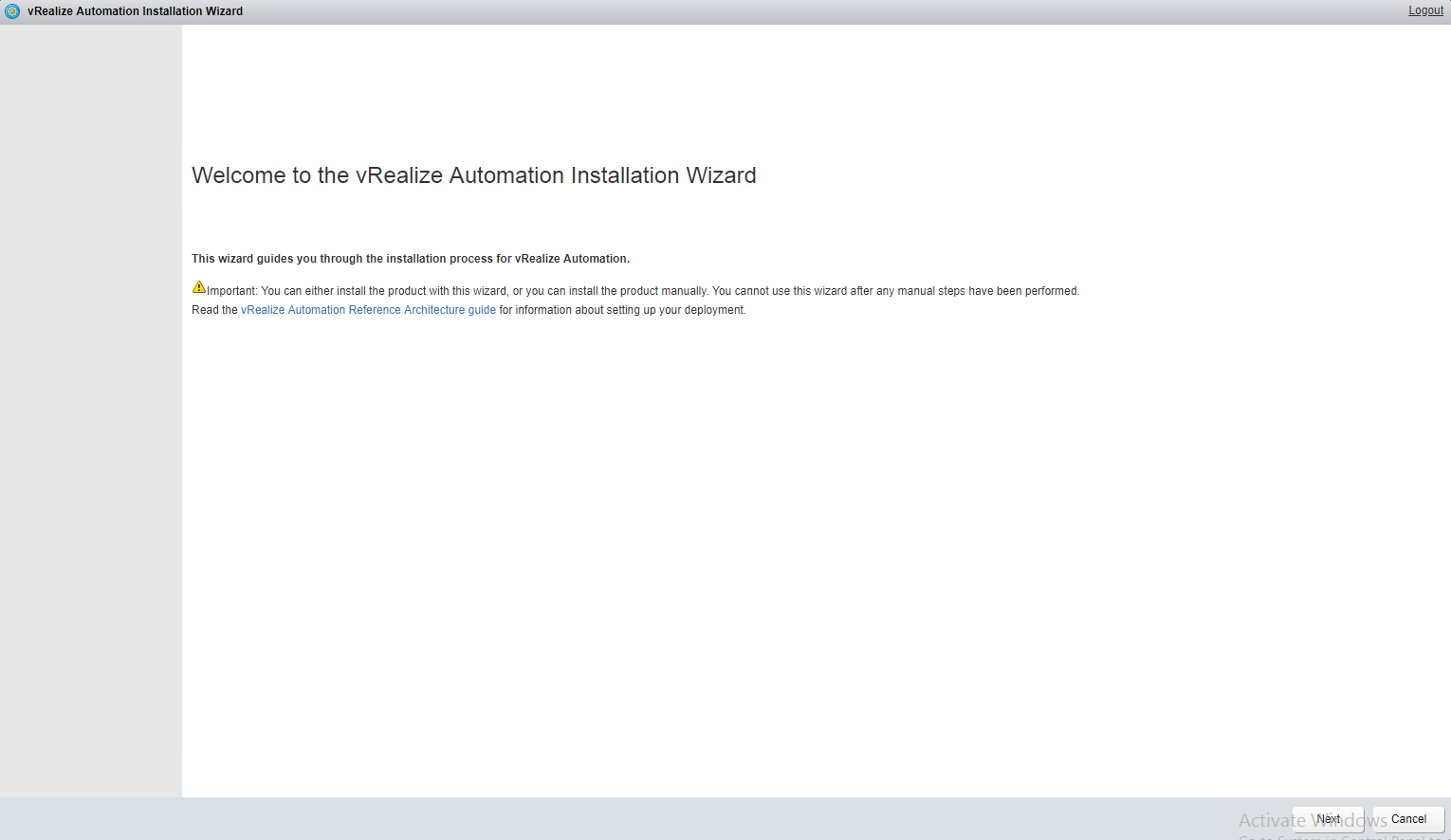

In this post, I will walk through the step-by-step installation of the vRealize Operations Manager Tenant App for vCloud Director. Before I jump into the lab, I want to take a moment to explain what this solution is all about and what it looks like from an architectural point of view.

What is the vROps Tenant App for vCloud Director?

The vROps Tenant App exposes vROps performance metrics to tenants in a vCloud Director (vCD) environment. Each tenant can see only the metrics relevant to its own organization.

From a service provider's point of view, this is an awesome solution: the Tenant App gives tenants complete visibility into the performance metrics of their environment. If an environment is not performing as expected, tenants can use it to perform root cause analysis of performance issues and carry out L1-L2 maintenance and troubleshooting tasks themselves, without raising service tickets with the service provider.