To test TKGm 1.5.1 against the latest version of NSX ALB, I upgraded my ALB deployment to 21.1.3. The TKG management and workload clusters deployed without any issues.

However, when I deployed a sample load balancer application that uses a dedicated Service Engine Group (SEG) and VIP network, the service stayed stuck waiting for an external IP assignment.

    root@linjump [ ~ ]# kubectl get all
    NAME                                   READY   STATUS    RESTARTS   AGE
    pod/http-deployment-785df98964-dhvqj   1/1     Running   0          104s
    pod/http-deployment-785df98964-xf6hk   1/1     Running   0          104s

    NAME             TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
    service/l4-svc   LoadBalancer   100.70.116.153   <pending>     80:31082/TCP   103s

    NAME                              READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/http-deployment   2/2     2            2           104s

    NAME                                         DESIRED   CURRENT   READY   AGE
    replicaset.apps/http-deployment-785df98964   2         2         2       104s

The virtual service was never created, so no SE VMs were deployed for virtual service placement.
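When a cluster has more than a handful of services, it can help to filter for the ones in this state. The following is a minimal sketch, not part of the original troubleshooting: `pending_lb_services` is a hypothetical helper name, and it assumes the default table layout of `kubectl get svc` (or `kubectl get all`), where the second column is the service type and the fourth is the external IP.

```shell
#!/bin/sh
# Hypothetical helper: reads `kubectl get svc` (or `kubectl get all`) table
# output on stdin and prints the name of every LoadBalancer service whose
# EXTERNAL-IP column still shows <pending>.
# Assumes the default column order: NAME TYPE CLUSTER-IP EXTERNAL-IP ...
pending_lb_services() {
  awk '$2 == "LoadBalancer" && $4 == "<pending>" { print $1 }'
}

# Example use against a live cluster (commented out so the snippet has no
# side effects when sourced):
#   kubectl get svc | pending_lb_services
```

For any service this prints, `kubectl describe svc <name>` shows the events recorded against it, which is usually the next place to look.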

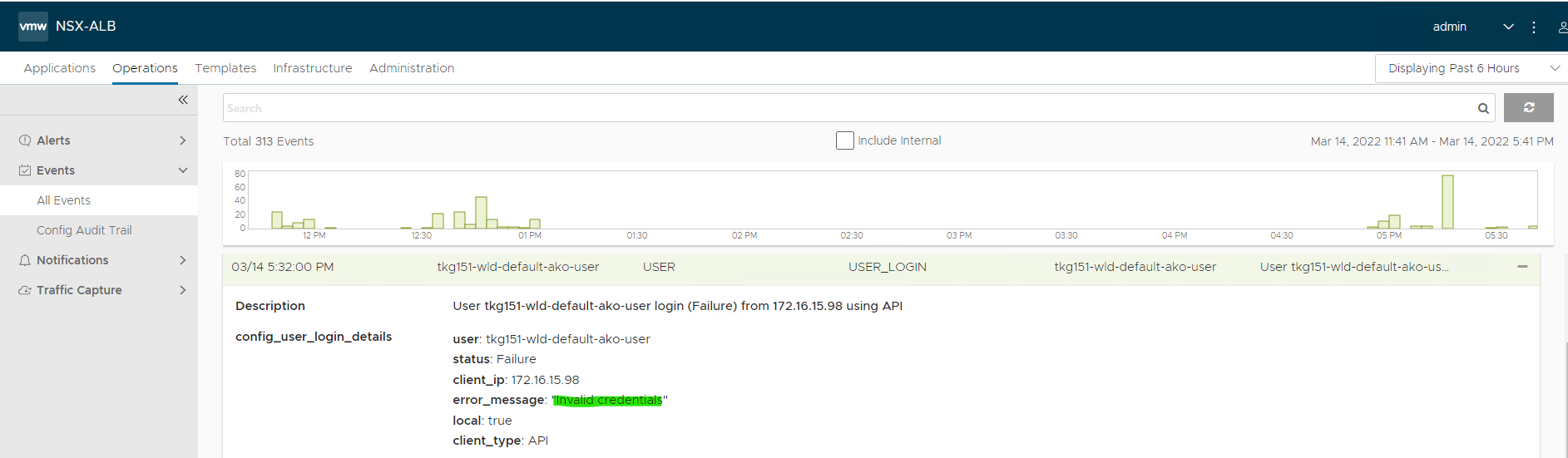

On top of that, the following events kept recurring in the NSX ALB portal.

While looking for the cause of the problem, I came across VMware KB-87640, which details the additional steps required to get TKGm 1.5.1 working with ALB 21.1.3. As per the KB, the cause of this problem is:

“The problem caused by the AVI controller version didn’t get specified in management cluster AKO Operator deployment, so the AKO Operator will use the default lowest support version to send request to AVI controller, which version is not supported by AVI controller 21.1.x any more.”
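In other words, the failure comes down to a version comparison: the API version the client asks for is lower than the oldest one the controller still accepts. As a rough illustration only, the sketch below shows that kind of check in shell. The helper name `version_ge` is hypothetical, and the snippet assumes a `sort` that supports `-V` (version sort), as GNU coreutils does. It does not reproduce how AKO Operator or the controller actually perform the check.

```shell
#!/bin/sh
# Hypothetical helper: succeeds (exit 0) when version $1 >= version $2.
# Relies on `sort -V`, which orders dotted version strings numerically,
# so 21.1.10 sorts after 21.1.3.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Example (both values are placeholders supplied by the caller; the actual
# default used by AKO Operator is not stated in this post):
#   if version_ge "$requested_api_version" "$controller_minimum"; then
#     echo "request version accepted"
#   else
#     echo "request version too old for this controller"
#   fi
```

The point of the sketch is only that an unset version silently falls back to a default, and a default that was fine for an older controller can fall below the minimum after an upgrade.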

To fix the issue, I followed the steps in the KB, and the environment was up and running again.

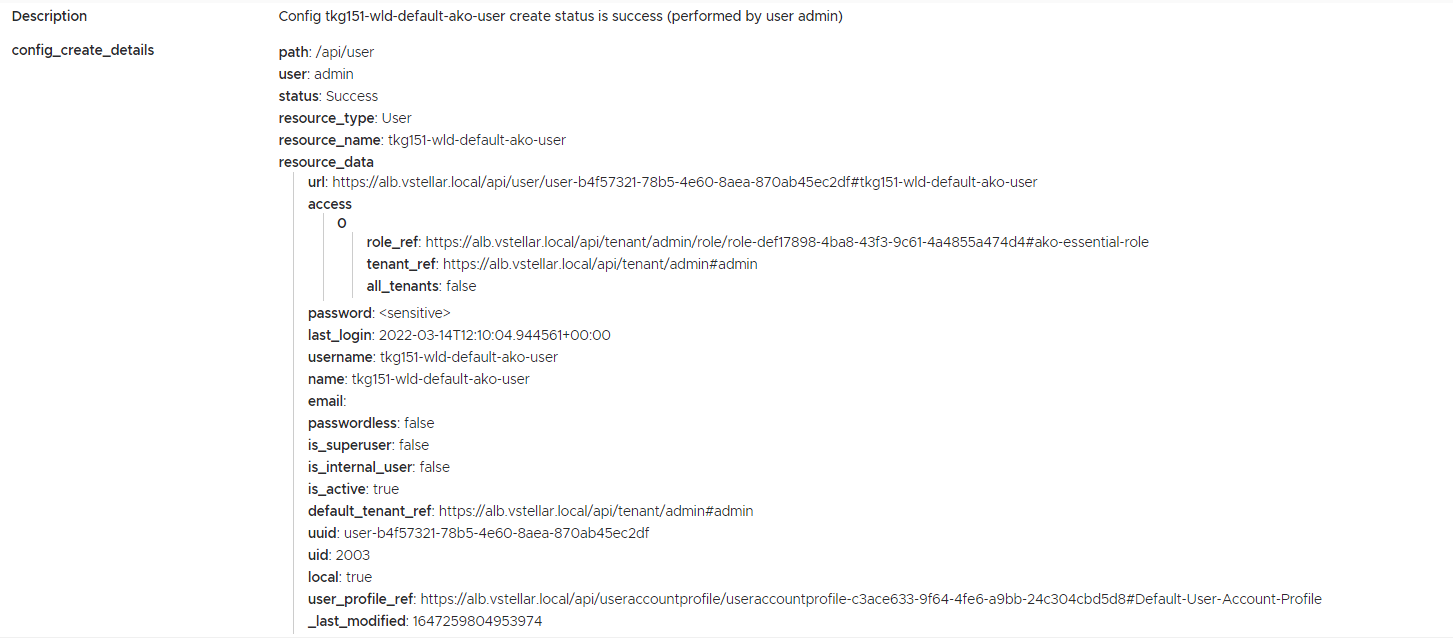

Once the ako-operator-controller-manager pod was redeployed, the virtual service got created and it triggered service engine creation. A new event was recorded in the ALB portal regarding the creation of ako-user.

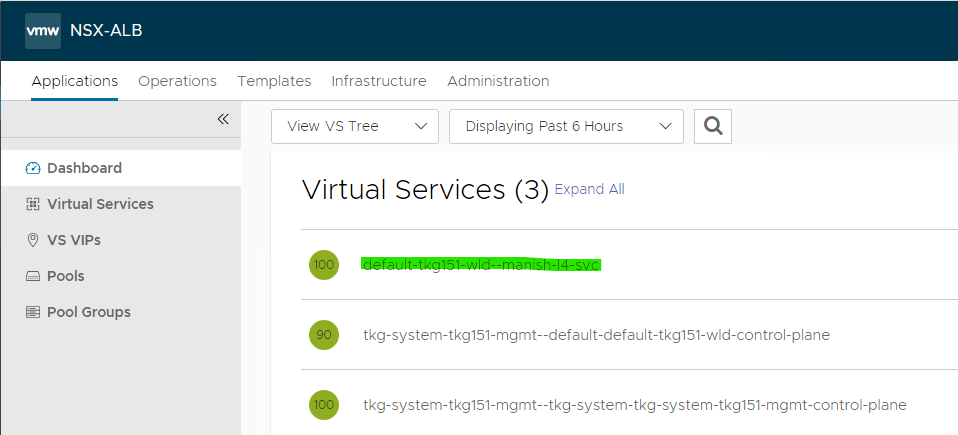

The virtual service was now placed and reported a healthy status.

The service received an external IP address from the appropriate VIP pool.

    root@linjump [ ~ ]# kubectl get svc
    NAME     TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
    l4-svc   LoadBalancer   100.70.116.153   172.16.18.2   80:31082/TCP   41m

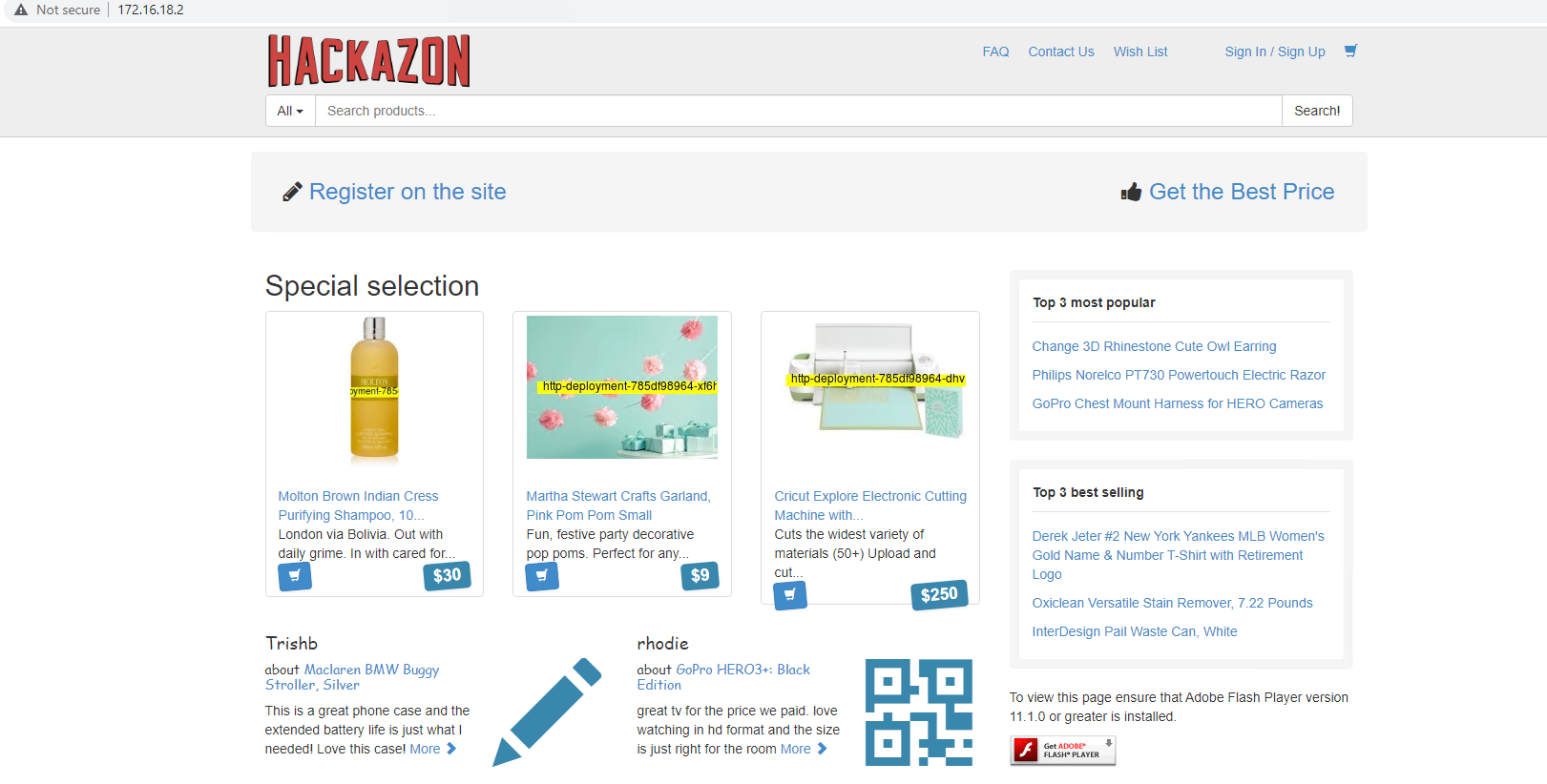

The application is also available via the service’s External-IP.
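The same check can be done from the command line. The following is a small sketch rather than something from the original walkthrough: `svc_external_ip` is a hypothetical helper that assumes the default `kubectl get svc` table layout, with the external IP in the fourth column.

```shell
#!/bin/sh
# Hypothetical helper: reads `kubectl get svc` table output on stdin and
# prints the EXTERNAL-IP of the service named in $1. Prints nothing if the
# address is still <pending> or the service has none.
svc_external_ip() {
  awk -v name="$1" \
    '$1 == name && $4 != "<pending>" && $4 != "<none>" { print $4 }'
}

# Example use against a live cluster (commented out so the snippet has no
# side effects when sourced). The service listens on port 80, so a plain
# HTTP request is enough to confirm reachability:
#   ip="$(kubectl get svc | svc_external_ip l4-svc)"
#   curl -s -o /dev/null -w '%{http_code}\n' "http://${ip}/"
```

An HTTP status code printed by `curl` indicates that traffic is reaching the application through the VIP.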

I hope you enjoyed reading this post. Feel free to share this on social media if it is worth sharing.