NSX ALB delivers scalable, enterprise-class ingress for containerized workloads running in Kubernetes clusters. Its biggest advantage in a Kubernetes environment is that it is agnostic to the underlying Kubernetes implementation. The NSX ALB Controller integrates with the Kubernetes ecosystem via REST API, so it can provide ingress and L4-L7 load balancing for a wide variety of Kubernetes implementations, including VMware Tanzu Kubernetes Grid (TKG).

NSX ALB provides ingress and load balancing for TKG through the Avi Kubernetes Operator (AKO), a Kubernetes operator that runs as a pod in each Tanzu Kubernetes cluster. AKO translates the required Kubernetes objects into Avi objects and automates the implementation of ingresses, routes, and services on the Service Engines (SEs) via the NSX ALB Controller.

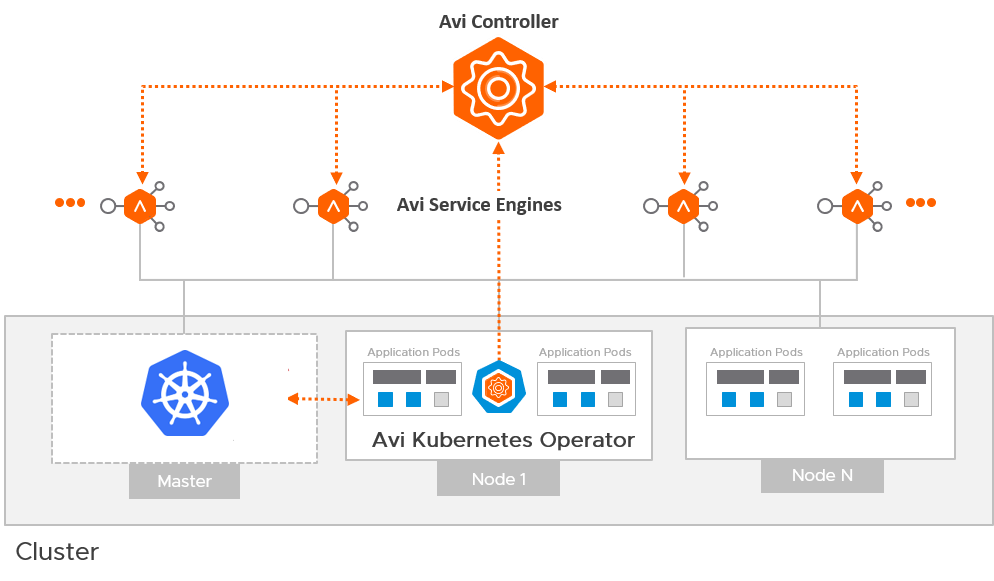

The diagram above shows a high-level architecture of AKO interaction with NSX ALB.

AKO interacts with the Controller and Service Engines via API to automate the provisioning of Virtual Services, VIPs, and related objects. Each Tanzu Kubernetes cluster runs its own instance of AKO once the cluster is deployed with NSX ALB settings.

If you are new to AKO and looking for installation steps, please have a look at my previous article on this topic.

AKO and TKG Relationship

When you deploy the TKG management cluster with NSX ALB as the load balancer, the AKO Operator is deployed automatically. It is responsible for automating the deployment of AKO pods in the workload clusters.

```
[root@tkg-bootstrapper ~]# kubectl get po -n tkg-system-networking
NAME                                               READY   STATUS    RESTARTS   AGE
ako-operator-controller-manager-76f5cf9695-57cpv   2/2     Running   7          2d
```

The AKO Operator (AKOO) doesn't need a Service Engine because the management cluster does not need to run any ingress service.

You can have more than one Tanzu Kubernetes cluster in your environment. Depending on your requirements, the clusters can share one AKO configuration, or each cluster can have its own. A multi-AKO configuration design requires more Service Engines and Service Engine Groups, because each AKO configuration is tied to an SE/SE Group.
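As a rough illustration of a per-cluster AKO configuration, the sketch below writes out an `AKODeploymentConfig` manifest that selects clusters by label and points them at a dedicated SE Group. The resource kind and field names are given to the best of my knowledge of the TKG documentation and should be verified against your release. Every name and value in it (`adc-team-a`, `team-a-se-group`, the controller address, the data network, the `team: a` label) is a hypothetical placeholder, not something taken from my lab.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical file name. All values in the manifest are placeholders, and the
# field names are an assumption to be checked against your TKG release.
manifest="adc-sketch.yaml"

cat > "$manifest" <<'EOF'
apiVersion: networking.tkg.tanzu.vmware.com/v1alpha1
kind: AKODeploymentConfig
metadata:
  name: adc-team-a
spec:
  adminCredentialRef:
    name: avi-controller-credentials
    namespace: tkg-system-networking
  certificateAuthorityRef:
    name: avi-controller-ca
    namespace: tkg-system-networking
  cloudName: Default-Cloud
  controller: alb-controller.example.lab
  # The SE Group this AKO configuration is tied to
  serviceEngineGroup: team-a-se-group
  dataNetwork:
    name: team-a-vip-network
    cidr: 192.0.2.0/24
  # Only workload clusters carrying this label receive this configuration
  clusterSelector:
    matchLabels:
      team: a
EOF

echo "Wrote $manifest"
# To try it, apply the file in the management cluster context and label a
# workload cluster so that the selector matches, for example:
#   kubectl apply -f adc-sketch.yaml
#   kubectl label cluster <cluster-name> team=a
```

Clusters that match no selector would keep using the shared configuration, which is why a per-cluster design needs its own SE Group for each additional configuration.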

Here is an example of AKO running in a Tanzu Kubernetes cluster.

```
[root@tkg-bootstrapper ~]# kubectl get all -n avi-system
NAME        READY   STATUS    RESTARTS   AGE
pod/ako-0   1/1     Running   0          33h

NAME                   READY   AGE
statefulset.apps/ako   1/1     33h
```

To demonstrate the ingress functionality, I am using a deployment YAML created by @Trevor Spires.

Applying the YAML in my workload cluster provisioned the following items.

```
[root@tkg-bootstrapper ~]# kubectl get all -n default
NAME                                    READY   STATUS    RESTARTS   AGE
pod/lb-svc-nginx-57bf68fd8c-5xj7j       1/1     Running   0          112m
pod/lb-svc-nginx-57bf68fd8c-pvlsp       1/1     Running   0          112m
pod/lb-svc-nginx-old-58cb9f676d-4qzvm   1/1     Running   0          112m
pod/lb-svc-nginx-old-58cb9f676d-z54jt   1/1     Running   0          112m

NAME                 TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
service/kubernetes   ClusterIP   100.64.0.1       <none>        443/TCP        47h
service/lb-svc-1     NodePort    100.70.209.112   <none>        80:30347/TCP   112m
service/lb-svc-2     NodePort    100.66.164.199   <none>        80:32657/TCP   112m

NAME                               READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/lb-svc-nginx       2/2     2            2           112m
deployment.apps/lb-svc-nginx-old   2/2     2            2           112m

NAME                                          DESIRED   CURRENT   READY   AGE
replicaset.apps/lb-svc-nginx-57bf68fd8c       2         2         2       112m
replicaset.apps/lb-svc-nginx-old-58cb9f676d   2         2         2       112m
```

The deployment above contains two websites. One is accessed via https://<site-url>/ and the other (old) one via https://<site-url>/old.

Applying the deployment YAML also provisions the following ingress.

```
[root@tkg-bootstrapper ~]# kubectl get ingress
NAME             CLASS    HOSTS                   ADDRESS        PORTS   AGE
myfirstingress   <none>   ingress.tkg.tanzu.lab   172.19.80.55   80      113m
```
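The deployment YAML itself is not reproduced in this post, so here is a minimal sketch of an Ingress that would produce the routing described: the root path goes to one Service and `/old` goes to the other. The ingress name, host, and Service names come from the outputs above. The mapping of `lb-svc-1` to `/` and `lb-svc-2` to `/old`, the `networking.k8s.io/v1` API version, and the file name are my assumptions and may differ from the original manifest.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical file name. Which Service backs which path is an assumption.
manifest="ingress-sketch.yaml"

cat > "$manifest" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: myfirstingress
spec:
  rules:
    - host: ingress.tkg.tanzu.lab
      http:
        paths:
          # Newer site, served from the root URL
          - path: /
            pathType: Prefix
            backend:
              service:
                name: lb-svc-1
                port:
                  number: 80
          # Older site, served under /old
          - path: /old
            pathType: Prefix
            backend:
              service:
                name: lb-svc-2
                port:
                  number: 80
EOF

echo "Wrote $manifest; apply it with: kubectl apply -f $manifest"
```

With an Ingress of this shape, AKO would be expected to create one Virtual Service for the host and steer requests to the matching backend by path.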

A new Virtual Service/VIP is created in NSX ALB.

To access the newer version of the site, hit the root URL of the website.

To access the older version, append /old to the site URL. Although nothing is currently provisioned there, you can see that NSX ALB steers the traffic to the older Nginx version.

That's it for this post. I hope you enjoyed reading it. Feel free to share it on social media if you find it worth sharing.