In the first post of this series, I discussed the prerequisites you should meet before attempting to install TKG on vSphere. In this post, I will demonstrate TKG management cluster deployment. A TKG management cluster can be created using either the UI or the CLI. Since I am a fan of the CLI method, I used it in my deployment.

Step 1: Prepare config.yaml file

When the TKG CLI is installed and the tkg command is invoked for the first time, it creates a hidden folder, .tkg, under the user's home directory. This folder contains the config.yaml file, which TKG uses to deploy the management cluster.

The default config.yaml file does not contain the infrastructure details to be used in the TKG deployment, such as the vCenter (VC) server, VC credentials, IP addresses, etc. We have to populate these details in config.yaml manually.
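Because these values are filled in by hand, a quick pre-flight check before running tkg init can catch a forgotten key. The sketch below is a hypothetical helper, not part of the TKG CLI. Its key list simply mirrors the config.yaml used in this post rather than an official TKG schema. It is also a deliberately naive line scanner, not a YAML parser, and only looks at unindented `KEY: value` lines.

```python
# Hypothetical pre-flight check. REQUIRED_VSPHERE_KEYS mirrors the config.yaml used in
# this post; it is an assumption, not an official TKG schema.
REQUIRED_VSPHERE_KEYS = [
    "VSPHERE_SERVER",
    "VSPHERE_USERNAME",
    "VSPHERE_PASSWORD",
    "VSPHERE_DATACENTER",
    "VSPHERE_DATASTORE",
    "VSPHERE_NETWORK",
    "VSPHERE_RESOURCE_POOL",
    "VSPHERE_FOLDER",
    "VSPHERE_HAPROXY_TEMPLATE",
    "VSPHERE_SSH_AUTHORIZED_KEY",
    "SERVICE_CIDR",
    "CLUSTER_CIDR",
]


def top_level_keys(config_text: str) -> set[str]:
    """Naive line scan (not a YAML parser): collect unindented 'KEY: value' names."""
    keys = set()
    for line in config_text.splitlines():
        # Skip blank lines, indented/nested lines, list items and comments.
        if not line or line[0] in " \t-#":
            continue
        key, sep, _ = line.partition(":")
        if sep:
            keys.add(key.strip())
    return keys


def missing_keys(config_text: str) -> list[str]:
    """Return the required keys that do not appear at the top level of config_text."""
    present = top_level_keys(config_text)
    return [key for key in REQUIRED_VSPHERE_KEYS if key not in present]


sample = """\
VSPHERE_SERVER: tkg-vc01.manish.lab
VSPHERE_USERNAME: administrator@vsphere.local
providers:
  - name: vsphere
"""
print(missing_keys(sample))  # every required key except the two set above
```

On the bootstrap VM, you could pass it the real file with `missing_keys((Path.home() / ".tkg" / "config.yaml").read_text())` after `from pathlib import Path`.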

Below is the config.yaml file I used in my environment.

```yaml
cert-manager-timeout: 30m0s
overridesFolder: /root/.tkg/overrides
BASTION_HOST_ENABLED: "true"
NODE_STARTUP_TIMEOUT: 20m
providers:
  - name: cluster-api
    url: /root/.tkg/providers/cluster-api/v0.3.6/core-components.yaml
    type: CoreProvider
  - name: aws
    url: /root/.tkg/providers/infrastructure-aws/v0.5.4/infrastructure-components.yaml
    type: InfrastructureProvider
  - name: vsphere
    url: /root/.tkg/providers/infrastructure-vsphere/v0.6.5/infrastructure-components.yaml
    type: InfrastructureProvider
  - name: tkg-service-vsphere
    url: /root/.tkg/providers/infrastructure-tkg-service-vsphere/v1.0.0/unused.yaml
    type: InfrastructureProvider
  - name: kubeadm
    url: /root/.tkg/providers/bootstrap-kubeadm/v0.3.6/bootstrap-components.yaml
    type: BootstrapProvider
  - name: kubeadm
    url: /root/.tkg/providers/control-plane-kubeadm/v0.3.6/control-plane-components.yaml
    type: ControlPlaneProvider
images:
  all:
    repository: registry.tkg.vmware.run/cluster-api
  cert-manager:
    repository: registry.tkg.vmware.run/cert-manager
    tag: v0.11.0_vmware.1
release:
  version: v1.1.2
VSPHERE_SERVER: tkg-vc01.manish.lab
VSPHERE_USERNAME: administrator@vsphere.local
VSPHERE_PASSWORD: <encoded:Vk13YXJlMSE=>
VSPHERE_DATACENTER: /BLR-DC
VSPHERE_DATASTORE: /BLR-DC/datastore/vsanDatastore
VSPHERE_NETWORK: WLD-NW
VSPHERE_RESOURCE_POOL: /BLR-DC/host/TKG-Cluster/Resources/TKG-RP
VSPHERE_FOLDER: /BLR-DC/vm/TKG-Infra
VSPHERE_WORKER_NUM_CPUS: "1"
VSPHERE_WORKER_MEM_MIB: "2048"
VSPHERE_WORKER_DISK_GIB: "20"
VSPHERE_CONTROL_PLANE_NUM_CPUS: "1"
VSPHERE_CONTROL_PLANE_MEM_MIB: "2048"
VSPHERE_CONTROL_PLANE_DISK_GIB: "20"
VSPHERE_HA_PROXY_NUM_CPUS: "1"
VSPHERE_HA_PROXY_MEM_MIB: "2048"
VSPHERE_HA_PROXY_DISK_GIB: "20"
VSPHERE_HAPROXY_TEMPLATE: /BLR-DC/vm/TKG-Templates/photon-3-haproxy-v1.2.4
VSPHERE_SSH_AUTHORIZED_KEY: ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAACAQDVLFUIW0ilHi28uEqXGbmIxiaLW1i9yJU8jFRtmc4CiB2CBOZWWFup1rO27fpqjSVR1xamt5hUyd827LnQqTXfI4p10CUNOYN2nSDc9i5ARGbRb/ZzUzreV45ociSbgQWIb7271zaJezjPOHxSODE2caotdBbf8lmZzdqRTzmJbXxh9Ba7MLlDSr7w32WOQM487Djlvzq5vdd6v0gsD0+z4mlaI5J25JjymUhRG5gG/V6FWN2MqVZhHA6nCq1H+3864ZCb7jwQjtYPFqE+F80agTLgIKLZDb+XR2rnvIhlco7ne+/+/VHwngs5cHa41Q9RF+fEPVUSLh3EpAbidfAd2j2mJqLdZN/6DkUQiI/AhA8fLR9qLi4ngNWkqvDSXOaf7ilQKtIJ5zjtdxLJMiVS+ARaFM0lmk8l9cq6q+fKenjokFld2T0LKMpCSTBabkP8vDYCBIEZkUIqGSDU64bggWFwvFzwy0ZPu7X8KAu4jq1NzOgUXZBxhuCgeM7J0AJF1EnMBnQUBxp/13mqX9t1YbF7e6knZbJi9dBfoEQh3jVTt+6abOHk2/h+Z5G5wjAfmewUyetChMMNwfj5pfUElbq3K5cau0x7buS6jaakS11ilMguYTeim/jz0Hnfprew2ZCoXM2FHCgYC0sleDgE7is4wJgZEd8CVi1SZx9uAw== tanzu@vstellar.com
SERVICE_CIDR: 100.64.0.0/13
CLUSTER_CIDR: 100.96.0.0/11
```
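As a general Kubernetes rule of thumb, the service range (SERVICE_CIDR), the pod range (CLUSTER_CIDR), and the network the nodes sit on should not overlap. The sketch below checks this with Python's standard ipaddress module. The node network value is an assumption inferred from the node IPs (172.16.80.x) shown later in this post and is not taken from config.yaml.

```python
import ipaddress


def overlapping_pairs(cidrs: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of named CIDR ranges that share at least one address."""
    nets = {name: ipaddress.ip_network(value) for name, value in cidrs.items()}
    names = list(nets)
    pairs = []
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            if nets[first].overlaps(nets[second]):
                pairs.append((first, second))
    return pairs


ranges = {
    "SERVICE_CIDR": "100.64.0.0/13",  # 100.64.0.0 - 100.71.255.255
    "CLUSTER_CIDR": "100.96.0.0/11",  # 100.96.0.0 - 100.127.255.255
    # Assumption: node network inferred from the node IPs seen later in this post.
    "node network": "172.16.80.0/24",
}
print(overlapping_pairs(ranges))  # → []
```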

Note: VSPHERE_SSH_AUTHORIZED_KEY is the public key that we generated earlier on the bootstrap VM. Paste the contents of the ~/.ssh/id_rsa.pub file into this variable.
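A key that is mangled while pasting (for example, truncated or wrapped) is easy to miss. An OpenSSH public key line has the form `<type> <base64 blob> [comment]`, and the decoded blob begins with a 4-byte big-endian length followed by the same type string. The minimal sketch below, a hypothetical helper and not part of TKG, uses that property to sanity-check the value before it goes into config.yaml.

```python
import base64
import binascii
import struct


def ssh_key_type(authorized_key: str) -> str:
    """Validate an OpenSSH public key line and return its key type, e.g. 'ssh-rsa'."""
    parts = authorized_key.strip().split(None, 2)
    if len(parts) < 2:
        raise ValueError("expected '<type> <base64-blob> [comment]'")
    declared, blob_b64 = parts[0], parts[1]
    try:
        blob = base64.b64decode(blob_b64, validate=True)
    except binascii.Error as exc:
        raise ValueError("key blob is not valid base64") from exc
    if len(blob) < 4:
        raise ValueError("key blob is too short")
    # The blob starts with a uint32 length prefix followed by the key type string.
    (length,) = struct.unpack(">I", blob[:4])
    embedded = blob[4:4 + length].decode("ascii", errors="replace")
    if embedded != declared:
        raise ValueError(f"declared type {declared!r} != embedded type {embedded!r}")
    return declared
```

For example, `ssh_key_type(Path("~/.ssh/id_rsa.pub").expanduser().read_text())` should return `"ssh-rsa"` for the key generated earlier (with `from pathlib import Path`).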

Step 2: TKG Cluster Creation Workflow

Before attempting to deploy TKG clusters, let's understand the cluster creation workflow. The following sequence of events takes place when a TKG cluster is provisioned:

- The TKG CLI on the bootstrap VM is used to initiate creation of the TKG management cluster.

- On the bootstrap VM, a bootstrap Kubernetes cluster is created using kind.

- CRDs, controllers, and actuators are installed into the bootstrap cluster.

- The bootstrap cluster provisions a management cluster on the destination infrastructure (vSphere or AWS), installs the CRDs, and then copies the Cluster API objects into the management cluster.

- The bootstrap cluster is deleted from the bootstrap VM.

Step 3: Deploy Management Cluster

The management cluster is created using the tkg init command.

```
tkg init -i vsphere -p dev -v 6 --name mj-tkgm01
```

Here:

- -i specifies the infrastructure provider. TKG can be deployed on both vSphere and Amazon EC2.

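- -p specifies the plan. For a lab or PoC, the dev plan is sufficient; in production environments, we use the prod plan.

- -v sets the log verbosity level (6 in this example).

- --name sets the name of the management cluster (mj-tkgm01).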

Note: The dev plan deploys one control plane node and one worker node. The prod plan deploys three control plane nodes and three worker nodes.

Note: To create a management cluster with custom node sizes for the control plane, worker, and load balancer VMs, we can specify the --controlplane-size, --worker-size, and --haproxy-size options with a value of small, medium, large, or extra-large. These flags override the values provided in the config.yaml file.
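When scripting the deployment, it can help to assemble the tkg init arguments in one place and reject an invalid size before the CLI runs. The sketch below is a hypothetical wrapper. It uses only the flags that appear in this post and builds the argument list without executing anything. You could hand the list to `subprocess.run(args, check=True)` on the bootstrap VM.

```python
import shlex

ALLOWED_SIZES = ("small", "medium", "large", "extra-large")


def tkg_init_args(name, infra="vsphere", plan="dev", verbosity=6,
                  controlplane_size=None, worker_size=None, haproxy_size=None):
    """Build (but do not run) a tkg init argument list using the flags shown in this post."""
    args = ["tkg", "init", "-i", infra, "-p", plan, "-v", str(verbosity), "--name", name]
    for flag, size in (("--controlplane-size", controlplane_size),
                       ("--worker-size", worker_size),
                       ("--haproxy-size", haproxy_size)):
        if size is None:
            continue  # omit the flag so the VSPHERE_* sizing values in config.yaml apply
        if size not in ALLOWED_SIZES:
            raise ValueError(f"{flag} must be one of {ALLOWED_SIZES}, got {size!r}")
        args += [flag, size]
    return args


print(shlex.join(tkg_init_args("mj-tkgm01", worker_size="medium")))
# → tkg init -i vsphere -p dev -v 6 --name mj-tkgm01 --worker-size medium
```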

The table below summarizes the resources allocated to the control plane, worker, and HAProxy VMs for each custom size.

| Size | vCPU | Memory (GB) | Storage (GB) |
|---|---|---|---|
| Small | 1 | 2 | 20 |
| Medium | 2 | 4 | 40 |
| Large | 2 | 8 | 40 |
| Extra Large | 4 | 16 | 80 |
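Combining this table with the plan node counts gives a quick estimate of the capacity the resource pool needs. The sketch below makes three assumptions: the CLI plan names are dev and prod, each cluster gets exactly one HAProxy load balancer VM (consistent with the three VMs seen for the dev plan), and memory and storage are in GB as in the table.

```python
# Sizes from the table above: (vCPU, memory GB, storage GB).
SIZES = {
    "small": (1, 2, 20),
    "medium": (2, 4, 40),
    "large": (2, 8, 40),
    "extra-large": (4, 16, 80),
}

# (control plane nodes, worker nodes) per plan, as described in this post.
# Assumption: one HAProxy load balancer VM per cluster on top of these.
PLAN_NODES = {"dev": (1, 1), "prod": (3, 3)}


def footprint(plan, controlplane="small", worker="small", haproxy="small"):
    """Estimate total VMs, vCPU, memory and storage for a management cluster."""
    cp_count, worker_count = PLAN_NODES[plan]
    vms = [(controlplane, cp_count), (worker, worker_count), (haproxy, 1)]
    return {
        "vms": sum(count for _, count in vms),
        "vcpu": sum(SIZES[size][0] * count for size, count in vms),
        "memory_gb": sum(SIZES[size][1] * count for size, count in vms),
        "storage_gb": sum(SIZES[size][2] * count for size, count in vms),
    }


print(footprint("dev"))  # → {'vms': 3, 'vcpu': 3, 'memory_gb': 6, 'storage_gb': 60}
```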

The following log entries are displayed on screen while the management cluster is being created.

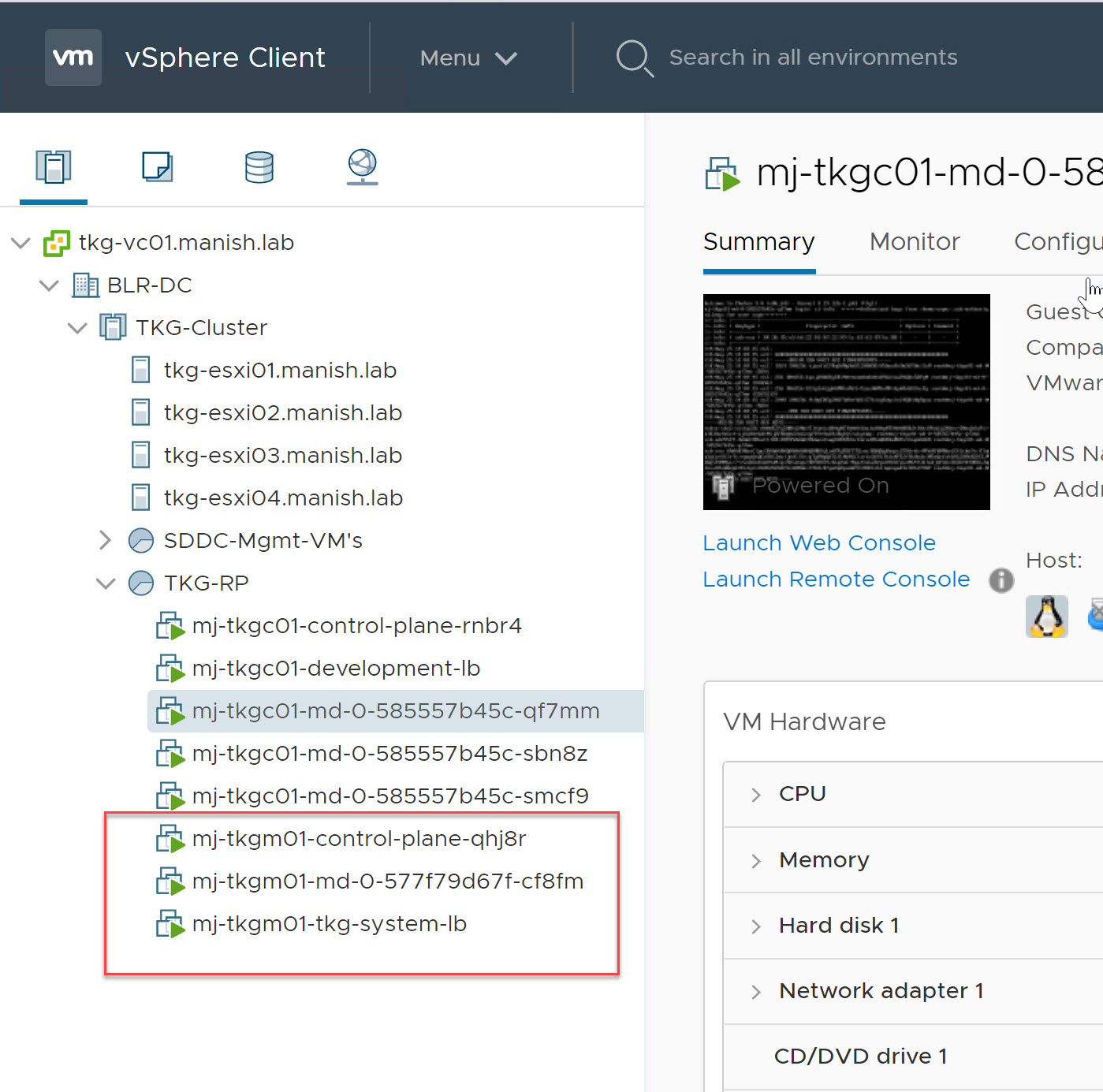

Once the management cluster creation task finishes, three new VMs appear in the resource pool selected for deployment: one load balancer, one control plane node, and one worker node.

Step 4: Verify Management Cluster Details

1: List all clusters

```
root@tkg-client:~# tkg get clusters
NAME       NAMESPACE    STATUS   CONTROLPLANE  WORKERS  KUBERNETES
mj-tkgc01  development  running  1/1           3/3      v1.18.3+vmware.1
```

2: Get details of management cluster

```
root@tkg-client:~# tkg get mc
MANAGEMENT-CLUSTER-NAME  CONTEXT-NAME
mj-tkgm01 *              mj-tkgm01-admin@mj-tkgm01
```

3: Get context of all clusters

```
root@tkg-client:~# kubectl config get-contexts
CURRENT   NAME                        CLUSTER     AUTHINFO          NAMESPACE
*         mj-tkgm01-admin@mj-tkgm01   mj-tkgm01   mj-tkgm01-admin
```

4: Use Management Cluster Context

```
root@tkg-client:~# kubectl config use-context mj-tkgm01-admin@mj-tkgm01
Switched to context "mj-tkgm01-admin@mj-tkgm01".
```

5: Get Node Details

```
root@tkg-client:~# kubectl get nodes
NAME                              STATUS   ROLES    AGE   VERSION
mj-tkgm01-control-plane-qhj8r     Ready    master   18h   v1.18.3+vmware.1
mj-tkgm01-md-0-577f79d67f-cf8fm   Ready    <none>   18h   v1.18.3+vmware.1

root@tkg-client:~# kubectl get nodes -o wide
NAME                              STATUS   ROLES    AGE   VERSION            INTERNAL-IP   EXTERNAL-IP
mj-tkgm01-control-plane-qhj8r     Ready    master   18h   v1.18.3+vmware.1   172.16.80.3   172.16.80.3
mj-tkgm01-md-0-577f79d67f-cf8fm   Ready    <none>   18h   v1.18.3+vmware.1   172.16.80.4   172.16.80.4
```
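For scripted verification, the JSON output of `kubectl get nodes -o json` is easier to consume than the table. Each node object carries a list of `status.conditions`, and a node is Ready when the condition of type `Ready` has status `"True"`. The sketch below is a hypothetical helper that returns the nodes that are not Ready. The sample input is trimmed to the fields the function reads.

```python
import json


def not_ready_nodes(nodes_json: str) -> list[str]:
    """Return names of nodes whose 'Ready' condition is not 'True' (missing counts as not ready)."""
    not_ready = []
    for item in json.loads(nodes_json)["items"]:
        conditions = item.get("status", {}).get("conditions", [])
        ready = next((c["status"] for c in conditions if c["type"] == "Ready"), "Unknown")
        if ready != "True":
            not_ready.append(item["metadata"]["name"])
    return not_ready


sample = json.dumps({"items": [
    {"metadata": {"name": "mj-tkgm01-control-plane-qhj8r"},
     "status": {"conditions": [{"type": "Ready", "status": "True"}]}},
    {"metadata": {"name": "mj-tkgm01-md-0-577f79d67f-cf8fm"},
     "status": {"conditions": [{"type": "Ready", "status": "True"}]}},
]})
print(not_ready_nodes(sample))  # → []
```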

6: Get all namespaces

```
root@tkg-client:~# kubectl get namespaces
NAME                                STATUS   AGE
capi-kubeadm-bootstrap-system       Active   18h
capi-kubeadm-control-plane-system   Active   18h
capi-system                         Active   18h
capi-webhook-system                 Active   18h
capv-system                         Active   18h
cert-manager                        Active   18h
default                             Active   18h
development                         Active   18h
kube-node-lease                     Active   18h
kube-public                         Active   18h
kube-system                         Active   18h
tkg-system                          Active   18h
```
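The capi-* namespaces hold the Cluster API core, kubeadm bootstrap, and control plane providers, and capv-system holds the Cluster API provider for vSphere. A quick way to confirm they all came up is to check `kubectl get namespaces -o json`, where each namespace reports `status.phase`. In the sketch below, the expected set is taken from the output above and is this post's selection, not an official TKG checklist.

```python
import json

# Assumption: expected namespaces taken from the listing above, not an official checklist.
EXPECTED_NAMESPACES = {
    "capi-kubeadm-bootstrap-system",
    "capi-kubeadm-control-plane-system",
    "capi-system",
    "capi-webhook-system",
    "capv-system",
    "cert-manager",
    "tkg-system",
}


def missing_or_inactive(namespaces_json: str, expected: set[str] = EXPECTED_NAMESPACES) -> set[str]:
    """Return expected namespaces that are absent or whose status.phase is not 'Active'."""
    phases = {
        item["metadata"]["name"]: item.get("status", {}).get("phase")
        for item in json.loads(namespaces_json)["items"]
    }
    return {name for name in expected if phases.get(name) != "Active"}
```

An empty set means every expected namespace exists and is Active.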

And that completes the management cluster deployment. We are now ready to deploy a TKG workload cluster and then deploy applications on it. Stay tuned for part 3 of this series!

I hope you enjoyed reading the post. Feel free to share this on social media if it is worth sharing 🙂