In the last post of this series, we deployed RabbitMQ and integrated it with vCD.

In this post, we will deploy and configure vSphere Replication Manager (VRMS). Before deploying VRMS, let’s discuss what the role of VRMS is in a VCAV stack.

If you are not following along with this series, then I recommend reading earlier posts of this series from the links below:

1: vCloud Availability Introduction

2: vCloud Availability Architecture & Components

4: Install Cloud Proxy for vCD

6: RabbitMQ Cluster Deployment and vCD Integration

vSphere Replication Manager manages and monitors the replication process from tenant VMs to the cloud provider environment. A vSphere Replication management service runs for each vCenter Server and tracks changes to VMs and infrastructure related to replication.

VRMS, when deployed, is integrated with the resource vCenter Server, which is registered to vCloud Director, and made available to tenants. You can deploy more than one VRMS server for HA and load balancing.

There are 2 ways to deploy VRMS:

1: Deploy an OVF template directly in your management vCenter.

2: Kick off the VRMS deployment from the VCAV appliance.

In this post, we will be following the second method.

The OVF templates for VRMS deployment are located in the directory /opt/vmware/share/vCAvForVCD/latest on the VCAV appliance, and we do not need to download them separately.

    root@mgmt-vcav [ /opt/vmware/share/vCAvForVCD/latest ]# ls -l
    total 815068
    -rw-r--r-- 1 root vmware    382976 Feb 15  2018 vCloud_Availability_4vCD-support.vmdk
    -rw-r--r-- 1 root vmware 832709120 Feb 15  2018 vCloud_Availability_4vCD-system.vmdk
    -rw-r--r-- 1 root vmware      1935 Feb 15  2018 vCloud_Availability_4vCD_AddOn_OVF10.cert
    -rw-r--r-- 1 root vmware       260 Feb 15  2018 vCloud_Availability_4vCD_AddOn_OVF10.mf
    -rw-r--r-- 1 root vmware     17923 Feb 15  2018 vCloud_Availability_4vCD_AddOn_OVF10.ovf
    -rw-r--r-- 1 root vmware      1943 Feb 15  2018 vCloud_Availability_4vCD_Cloud_Service_OVF10.cert
    -rw-r--r-- 1 root vmware       268 Feb 15  2018 vCloud_Availability_4vCD_Cloud_Service_OVF10.mf
    -rw-r--r-- 1 root vmware    324743 Feb 15  2018 vCloud_Availability_4vCD_Cloud_Service_OVF10.ovf
    -rw-r--r-- 1 root vmware      1929 Feb 15  2018 vCloud_Availability_4vCD_OVF10.cert
    -rw-r--r-- 1 root vmware       254 Feb 15  2018 vCloud_Availability_4vCD_OVF10.mf
    -rw-r--r-- 1 root vmware    325475 Feb 15  2018 vCloud_Availability_4vCD_OVF10.ovf
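Each `.ovf` descriptor in the listing ships with a matching `.mf` manifest and `.cert` file. As a quick sanity check before deploying, a sketch like the one below reports any descriptor that is missing its companions. The function name `check_ovf_set` is hypothetical and not part of the VCAV tooling; it only looks at file names and does not verify checksums or signatures.

```shell
# Hypothetical helper, not part of the vcav CLI: for every .ovf file in a
# directory, report a missing .mf manifest or .cert file.
check_ovf_set() {
  dir=$1
  status=0
  for ovf in "$dir"/*.ovf; do
    # If the glob matched nothing, the pattern comes back unexpanded.
    [ -e "$ovf" ] || { echo "no .ovf files in $dir" >&2; return 1; }
    base=${ovf%.ovf}
    for ext in mf cert; do
      if [ ! -f "$base.$ext" ]; then
        echo "missing: $base.$ext" >&2
        status=1
      fi
    done
  done
  return "$status"
}
```

On the appliance this could be called as `check_ovf_set /opt/vmware/share/vCAvForVCD/latest`; a zero exit status means every descriptor has both companion files.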

Before we kick off the deployment, we need to define a few variables as shown below:

    root@mgmt-vcav [ ~ ]# echo 'vmware' > ~/.ssh/.root
    root@mgmt-vcav [ ~ ]# export SSO_USER=administrator@alex.lab
    root@mgmt-vcav [ ~ ]# export VSPHERE01_PLACEMENT_NETWORK=Mgmt-PG
    root@mgmt-vcav [ ~ ]# export VSPHERE01_PLACEMENT_DATASTORE=iSCSI-4
    root@mgmt-vcav [ ~ ]# export VSPHERE01_ADDRESS=compute-vc01.alex.local
    root@mgmt-vcav [ ~ ]# export VSPHERE01_PLACEMENT_LOCATOR=Cloud-DC01/host/Compute-Cluster
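The commands later in this post read the root password from `~/.ssh/.root` and the SSO password from `~/.ssh/.sso`, so both files need to exist before you deploy. Since they hold credentials in plain text, it is worth making them readable only by their owner. A minimal sketch, assuming a hypothetical helper named `write_secret`:

```shell
# Hypothetical helper: write a secret to a file that only the owner can read.
write_secret() {
  path=$1
  value=$2
  (
    umask 077                        # files created here get mode 0600
    mkdir -p "$(dirname "$path")"
    printf '%s\n' "$value" > "$path"
  )
  chmod 600 "$path"                  # also tighten a file that already existed
}
```

For example, `write_secret ~/.ssh/.root 'vmware'` produces the same file content as the `echo` command above, and `write_secret ~/.ssh/.sso '<sso-password>'` would create the SSO password file, where `<sso-password>` is a placeholder for your own value.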

Note: Compute-vc01 is my resource vCenter server.

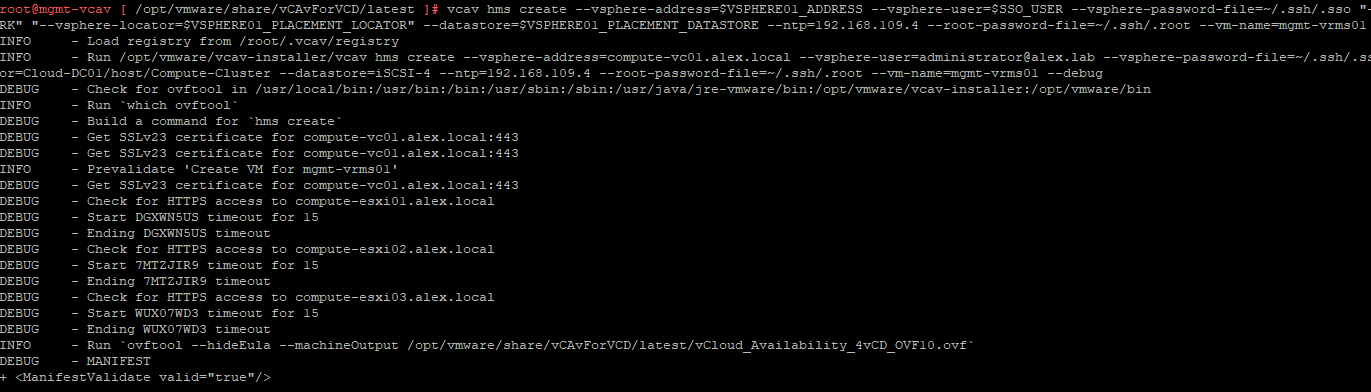
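An unset variable expands to an empty string, which the deployment command would pass along without complaint. One way to catch that early is a small check like the sketch below. The name `require_vars` is hypothetical; pass it only literal variable names, because it uses `eval` to look them up.

```shell
# Hypothetical helper: fail if any of the named variables is unset or empty.
# Only pass trusted, literal variable names, since eval is used for lookup.
require_vars() {
  rc=0
  for name in "$@"; do
    eval "val=\${$name:-}"
    if [ -z "$val" ]; then
      echo "not set: $name" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

A call such as `require_vars SSO_USER VSPHERE01_ADDRESS VSPHERE01_PLACEMENT_NETWORK VSPHERE01_PLACEMENT_DATASTORE VSPHERE01_PLACEMENT_LOCATOR` returns non-zero and names the missing variables if any of them is empty.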

To deploy the VRMS, run the following command. Change the NTP server, VM name, and VM address as per your environment.

    # vcav hms create \
        --vsphere-address=$VSPHERE01_ADDRESS \
        --vsphere-user=$SSO_USER \
        --vsphere-password-file=~/.ssh/.sso \
        "--network=$VSPHERE01_PLACEMENT_NETWORK" \
        "--vsphere-locator=$VSPHERE01_PLACEMENT_LOCATOR" \
        --datastore=$VSPHERE01_PLACEMENT_DATASTORE \
        --ntp=pool.ntp.org \
        --root-password-file=~/.ssh/.root \
        --vm-name=mgmt-vrms01 \
        --vm-address=192.168.109.40

If you have not made any mistakes in defining the variables, you should see the deployment kick off.
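Since a wrong variable only shows up once the deployment is under way, one option is to print the fully expanded command and review it first. The sketch below only echoes the command and never calls `vcav`. The function name `print_hms_create` is hypothetical, and the flags are simply the ones used in the command above. Values containing spaces are printed unquoted, so treat the output as something to read rather than paste.

```shell
# Hypothetical dry-run helper: prints the expanded deployment command
# instead of running it. Arguments: VM name, VM IP address.
print_hms_create() {
  vm_name=$1
  vm_address=$2
  # ${VAR:?} stops with an error if the variable is unset or empty.
  # Each argument is printed on its own line, followed by " \".
  printf '%s \\\n' \
    "vcav hms create" \
    "  --vsphere-address=${VSPHERE01_ADDRESS:?}" \
    "  --vsphere-user=${SSO_USER:?}" \
    "  --vsphere-password-file=~/.ssh/.sso" \
    "  --network=${VSPHERE01_PLACEMENT_NETWORK:?}" \
    "  --vsphere-locator=${VSPHERE01_PLACEMENT_LOCATOR:?}" \
    "  --datastore=${VSPHERE01_PLACEMENT_DATASTORE:?}" \
    "  --ntp=pool.ntp.org" \
    "  --root-password-file=~/.ssh/.root" \
    "  --vm-name=$vm_name"
  # Last line has no trailing backslash.
  printf '%s\n' "  --vm-address=$vm_address"
}
```

Running `print_hms_create mgmt-vrms01 192.168.109.40` after exporting the variables shows exactly which values the real command would receive.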

Configure trust between VCAV and VRMS.

Connect to the VCAV appliance and run the command below:

    # vcav vsphere trust-ssh \
        --vsphere-address=$VSPHERE01_ADDRESS \
        --vsphere-user=$SSO_USER \
        --vsphere-password-file=~/.ssh/.sso \
        --root-password-file=~/.ssh/.root \
        --vm-name=mgmt-vrms01 \
        --vm-address=192.168.109.40

If the command returns an OK message, VCAV now trusts the VRMS appliance.
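When the trust step runs from a script rather than interactively, the check for the OK message can be automated. A minimal sketch, assuming the success output contains the literal string `OK` as described above; `expect_ok` is a hypothetical wrapper and not a `vcav` feature, and the substring match is deliberately loose.

```shell
# Hypothetical wrapper: run a command and succeed only if it exits with
# status 0 and its combined output contains the string "OK".
expect_ok() {
  out=$("$@" 2>&1) || { printf '%s\n' "$out" >&2; return 1; }
  case $out in
    *OK*) printf '%s\n' "$out" ;;
    *)    printf '%s\n' "$out" >&2; return 1 ;;
  esac
}
```

You would prefix the trust command with the wrapper, for example `expect_ok vcav vsphere trust-ssh` followed by the same flags as above, and stop the script if it returns non-zero.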

Learnings from the Deployment

I deployed my VRMS appliance in the resource vCenter instead of the management vCenter, and it got registered to the resource vCenter. But ideally, VRMS should be deployed in the management stack. If you deploy it there, then VRMS will register as an extension to the instance of vSphere it is deployed to. This model is called in-inventory deployment.

For in-inventory deployments, the vSphere Replication Manager manages the replications to the vSphere instance it is deployed to.

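So if you have deployed your VRMS in the management stack, you need to unregister it from the management vCenter by running the command below, with the vSphere address and credentials pointing at the management vCenter rather than the resource vCenter: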

    # vcav hms unregister-extension --vsphere-address=$VSPHERE01_ADDRESS --vsphere-user=$SSO_USER --vsphere-password-file=~/.ssh/.sso

So, how do you deal with this situation?

You have two options. You can deploy the VRMS appliance to a separate resource pool (one that tenants are not using) in the resource vCenter and then register it with that vCenter; this model is called out-of-inventory deployment. Alternatively, you can deploy it in your management vCenter and later unregister the extension using the command discussed above.

And that’s it for this post. In the next post of this series, we will deploy vSphere Replication Cloud Service.

I hope you enjoyed reading this post. Feel free to share this on social media if it’s worth sharing.