In part 1 of this blog series, I discussed the Container Service Extension (CSE) 4.0 platform architecture and gave a high-level overview of a production-grade deployment. This post focuses on configuring NSX Advanced Load Balancer (NSX ALB) and integrating it with VCD.

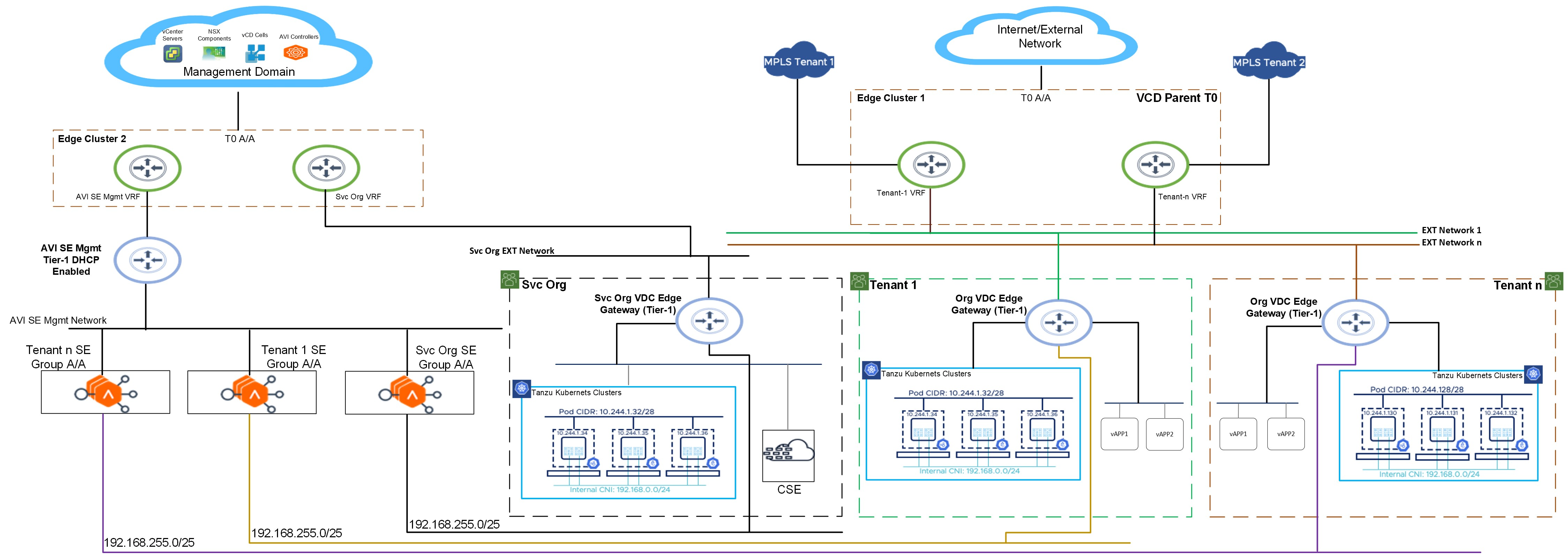

I will not go through every step of the deployment and configuration, as I have already covered that topic in an earlier article. Instead, I will discuss the configuration steps I took to deploy the topology in the architecture diagram.

Let me quickly go over the NSX-T networking setup before getting into the NSX ALB configuration.

I have deployed a new edge cluster on a dedicated vSphere cluster for traffic separation. This edge cluster resides in my compute/workload domain. The NSX-T manager managing the edges is deployed in my management domain.

On the left side of the architecture diagram, you can see one Tier-0 gateway with VRFs carved out for NSX ALB and CSE networking. The ALB and CSE VRFs peer over BGP with my Top of Rack (ToR) switch.

I have a DHCP-enabled Tier-1 gateway for NSX ALB SE management and a logical segment, ‘ALB-SE-Mgmt’, to which the management interfaces of the Service Engine VMs connect. The NSX ALB controllers are deployed on a VLAN-backed network in my management domain, and traffic between the controllers and the SE VMs is routed over BGP.
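If you want to sanity-check the DHCP configuration on the ALB-SE-Mgmt segment before deploying Service Engines, a small script can confirm that the DHCP range fits inside the segment subnet and stays clear of the gateway. The sketch below uses Python's standard `ipaddress` module; the subnet, gateway, and range values are hypothetical placeholders, not values from my lab.

```python
import ipaddress

# Hypothetical values for the ALB-SE-Mgmt segment; replace with your own.
SEGMENT_GATEWAY_CIDR = "172.16.50.1/24"  # gateway IP/prefix configured on the segment
DHCP_RANGE = ("172.16.50.100", "172.16.50.199")


def check_dhcp_range(gateway_cidr: str, start: str, end: str) -> int:
    """Validate a DHCP range for the SE management segment.

    Checks that the range lies inside the segment subnet and excludes the
    gateway, network, and broadcast addresses. Returns the number of
    addresses in the range.
    """
    iface = ipaddress.ip_interface(gateway_cidr)
    network, gateway = iface.network, iface.ip
    first, last = ipaddress.ip_address(start), ipaddress.ip_address(end)
    if first > last:
        raise ValueError("DHCP range start is after its end")
    if first not in network or last not in network:
        raise ValueError(f"DHCP range is not inside {network}")
    if first <= gateway <= last:
        raise ValueError(f"DHCP range overlaps the gateway {gateway}")
    if first == network.network_address or last == network.broadcast_address:
        raise ValueError("DHCP range includes the network or broadcast address")
    return int(last) - int(first) + 1


if __name__ == "__main__":
    size = check_dhcp_range(SEGMENT_GATEWAY_CIDR, *DHCP_RANGE)
    print(f"DHCP range holds {size} addresses for SE management interfaces")
```

Each Service Engine VM uses one management address, so the returned count is a rough upper bound on how many SE VMs the segment can serve.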

The NSX ALB controller is already deployed, and the following initial configuration is complete:

- Initial password & backup passphrase.

- NTP & DNS configuration.

- Licensing.

- Controller cluster (optional) and HA.

- Default certificate replaced.

- vCenter and NSX-T credentials created.

Step 1: Configure NSX-T Cloud in NSX ALB

In the NSX-T cloud configuration, select the overlay transport zone that you configured in NSX-T. This is the same transport zone that backs the network pool in VCD.

For the management and data networks, you can either reuse the logical segment created for SE management or create a dummy VIP network attached to the ALB Tier-1 gateway. VCD automatically plumbs the VIP networks for tenants.
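The two rules in this step (the cloud's overlay transport zone should be the one behind the VCD network pool, and the management/data networks should be either the SE management segment or a dummy VIP network on the ALB Tier-1) can be written down as a quick pre-flight check. This is a hypothetical planning helper, not an NSX ALB API call; the names `Overlay-TZ`, `ALB-SE-Mgmt`, and `ALB-Dummy-VIP` are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NsxtCloudPlan:
    """Hypothetical planning record for the NSX-T cloud (not an NSX ALB API object)."""
    overlay_transport_zone: str
    management_network: str
    data_network: str


def validate_cloud_plan(plan, network_pool_tz, se_mgmt_segment, dummy_vip_networks):
    """Return a list of problems; an empty list means the plan is consistent.

    Rules: the cloud's overlay transport zone matches the one backing the VCD
    network pool, and the management/data networks are either the SE
    management segment or a dummy VIP network on the ALB Tier-1.
    """
    problems = []
    if plan.overlay_transport_zone != network_pool_tz:
        problems.append(
            f"transport zone {plan.overlay_transport_zone!r} does not back the "
            f"VCD network pool (expected {network_pool_tz!r})"
        )
    allowed = {se_mgmt_segment, *dummy_vip_networks}
    for role, net in (("management", plan.management_network), ("data", plan.data_network)):
        if net not in allowed:
            problems.append(
                f"{role} network {net!r} is neither the SE mgmt segment nor a dummy VIP network"
            )
    return problems


if __name__ == "__main__":
    plan = NsxtCloudPlan("Overlay-TZ", "ALB-SE-Mgmt", "ALB-Dummy-VIP")
    print(validate_cloud_plan(plan, "Overlay-TZ", "ALB-SE-Mgmt", ["ALB-Dummy-VIP"]))  # []
```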

Step 2: Create a Service Engine Group for CSE and VCD Tenants

Create a Service Engine Group (SEG) for the VCD tenants. A SEG is not mandatory for CSE, but if you plan to deploy a Kubernetes cluster in the solution organization itself to expose centralized or shared services to tenants, you also need Service Engines deployed for CSE.

Note: I am using a dedicated Service Engine Group per tenant in my design, so each tenant gets its own SEG and its own Service Engine VMs.
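With one dedicated SEG per tenant, it helps to settle on a naming convention up front so that each tenant maps to exactly one SEG. The sketch below assumes a hypothetical `seg-<org>` convention and only adds a SEG for the CSE solution org when that org hosts a Kubernetes cluster for shared services.

```python
def plan_service_engine_groups(tenant_orgs, solution_org=None):
    """Map each VCD org to its own dedicated SEG name.

    The "seg-<org>" naming scheme is hypothetical. Pass solution_org only when
    a Kubernetes cluster in the CSE solution org exposes shared services to
    tenants, since that is the case where CSE needs Service Engines.
    """
    orgs = list(tenant_orgs) + ([solution_org] if solution_org else [])
    plan = {}
    seen = set()
    for org in orgs:
        seg = f"seg-{org.lower()}"
        if seg in seen:
            # Two orgs differing only by case would share a SEG name.
            raise ValueError(f"SEG name collision for org {org!r}")
        seen.add(seg)
        plan[org] = seg
    return plan


if __name__ == "__main__":
    print(plan_service_engine_groups(["TenantA", "TenantB"], solution_org="CSE-Solution"))
```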

Step 3: Configure Routing

Under the routing tab, add the default gateway address in the Global VRF Context.
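If you prefer the NSX ALB REST API over the UI, the same default route can be expressed as a static route in the global VRF context. The following sketch only builds the request body; it assumes the Avi `VrfContext` object model (`static_routes` entries with `prefix`, `next_hop`, and `route_id`) and the API's PATCH `add` semantics, which you should verify against the API reference for your controller version. You would send it as a PATCH to `/api/vrfcontext/<uuid>` for the VRF context named `global`. The gateway address and route ID are hypothetical placeholders.

```python
import ipaddress
import json


def default_route_patch(gateway: str, route_id: str = "1") -> dict:
    """Build a PATCH body that adds a 0.0.0.0/0 route to an NSX ALB VRF context.

    Field names follow the Avi VrfContext/StaticRoute object model as assumed
    here; verify them against the API reference for your controller version.
    """
    gw = ipaddress.IPv4Address(gateway)  # raises ValueError on a bad address
    route = {
        "route_id": route_id,
        "prefix": {"ip_addr": {"addr": "0.0.0.0", "type": "V4"}, "mask": 0},
        "next_hop": {"addr": str(gw), "type": "V4"},
    }
    # "add" appends to the existing static_routes list instead of replacing it.
    return {"add": {"static_routes": [route]}}


if __name__ == "__main__":
    # Hypothetical default gateway of the SE management network.
    print(json.dumps(default_route_patch("172.16.50.1"), indent=2))
```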

Step 4: Register NSX ALB in VCD

Log in to VCD with System Administrator credentials, navigate to Resources > Infrastructure Resources > NSX-ALB > Controllers, click ADD, and provide the NSX ALB URL and credentials.
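The same registration can be scripted against the VCD CloudAPI. This sketch builds, but does not send, the request. It assumes the `/cloudapi/1.0.0/loadBalancer/controllers` endpoint, a provider bearer token (for example, the `X-VMWARE-VCLOUD-ACCESS-TOKEN` header returned by a `/cloudapi/1.0.0/sessions/provider` login), and API version 37.0 (VCD 10.4). Host names, credentials, and the controller name are hypothetical, and some VCD releases may expect additional fields in the body.

```python
import json
import urllib.request


def build_controller_request(vcd_host, token, name, alb_url, username, password,
                             api_version="37.0"):
    """Build (but do not send) a request registering an NSX ALB controller in VCD.

    Assumes the VCD CloudAPI endpoint /cloudapi/1.0.0/loadBalancer/controllers
    and a provider bearer token; api_version 37.0 matches VCD 10.4, so adjust
    it for your release.
    """
    body = {"name": name, "url": alb_url, "username": username, "password": password}
    return urllib.request.Request(
        url=f"https://{vcd_host}/cloudapi/1.0.0/loadBalancer/controllers",
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Accept": f"application/json;version={api_version}",
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
    )


if __name__ == "__main__":
    req = build_controller_request(
        "vcd.example.com", "<token>", "alb-controller-01",
        "https://alb.example.com", "admin", "<password>",
    )
    print(req.get_method(), req.full_url)
```

To actually submit it, pass the request to `urllib.request.urlopen`, keeping certificate validation enabled.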

Step 5: Import NSX-T Cloud in VCD

To import the NSX-T cloud into VCD, navigate to Resources > Infrastructure Resources > NSX-ALB > NSX-T Cloud and click ADD.

A sample screenshot is shown below for reference.

Step 6: Import Service Engine Groups in VCD

To import Service Engine Groups into VCD, navigate to Resources > Infrastructure Resources > NSX-ALB > Service Engine Groups and click ADD.

Provide the following information:

- NSX-T Cloud: Select the NSX-T Cloud that you imported in the previous step.

- Reservation Model: Dedicated.

- Name: Display name for SEG in VCD.

- Feature Set: Premium (if the NSX ALB license tier is Enterprise).

- Available Service Engine Groups: Select the SEG from those available in NSX ALB.
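Before clicking through the import dialog, the field rules above can be captured as a small validation helper. This is a hypothetical model of the form, not the VCD API schema; the `Shared` and `Standard` options are included only to represent the form's other choices, and the Premium/Enterprise rule mirrors the note in the list.

```python
from dataclasses import dataclass

RESERVATION_MODELS = {"Dedicated", "Shared"}
FEATURE_SETS = {"Standard", "Premium"}


@dataclass(frozen=True)
class SegImport:
    """Hypothetical model of the fields on the VCD Service Engine Group import form."""
    nsxt_cloud: str
    reservation_model: str
    name: str
    feature_set: str
    alb_seg: str


def validate_seg_import(req, imported_clouds, available_segs, license_tier):
    """Return a list of problems with a SEG import; empty means it looks valid."""
    errors = []
    if req.nsxt_cloud not in imported_clouds:
        errors.append(f"NSX-T cloud {req.nsxt_cloud!r} has not been imported into VCD")
    if req.reservation_model not in RESERVATION_MODELS:
        errors.append(f"unknown reservation model {req.reservation_model!r}")
    if not req.name.strip():
        errors.append("display name is empty")
    if req.feature_set not in FEATURE_SETS:
        errors.append(f"unknown feature set {req.feature_set!r}")
    elif req.feature_set == "Premium" and license_tier != "Enterprise":
        errors.append("Premium feature set assumes an Enterprise NSX ALB license")
    if req.alb_seg not in available_segs:
        errors.append(f"SEG {req.alb_seg!r} is not available in NSX ALB")
    return errors


if __name__ == "__main__":
    form = SegImport("alb-nsxt-cloud", "Dedicated", "TenantA-SEG", "Premium", "seg-tenanta")
    print(validate_seg_import(form, {"alb-nsxt-cloud"}, {"seg-tenanta"}, "Enterprise"))
```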

And that completes the NSX ALB configuration. In the next blog of this series, I will demonstrate the Service Provider workflow for configuring Container Service Extension.

I hope you enjoyed reading this post. Feel free to share this on social media if it is worth sharing.
