An air-gapped (internet-restricted) installation is used when the TKG environment (bootstrapper and cluster nodes) cannot reach the internet to download the installation binaries from the public VMware registry during a TKG install or upgrade.

Internet-restricted environments can use an internal private registry in place of the VMware public registry. Harbor is a commonly used registry solution.

This blog post covers how to install TKGm using a private registry configured with a self-signed certificate.

Prerequisites for an Internet-Restricted Environment

Before you can deploy TKG management and workload clusters in an Internet-restricted environment, you must have:

- An Internet-connected Linux jumphost machine that has:

  - A minimum of 2 GB RAM, 2 vCPU, and 30 GB hard disk space.

  - Docker client installed.

  - Tanzu CLI installed.

  - Carvel Tools installed.

  - yq version 4.9.2 or later installed.

- An internet-restricted Linux machine with Harbor installed.

- A way for TKG cluster VMs to access images in the private registry.

- A base image template containing the OS and Kubernetes versions used to deploy the management and workload clusters, imported into vSphere. Instructions for the same are here
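The jumphost items in the list above can be checked with a short script. This is a minimal sketch, not part of the original procedure: the helper names `version_ge` and `missing_tools` are hypothetical, the Carvel binary names (`ytt`, `kapp`, `kbld`, `imgpkg`) assume a standard install, and the version comparison relies on `sort -V` being available.

```shell
# version_ge A B: succeeds when dotted version A is greater than or equal to B.
# Assumes a sort implementation that supports -V (version sort).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# missing_tools NAME...: prints every command name that is not found on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Example usage on the jumphost (not run automatically):
#   missing_tools docker tanzu ytt kapp kbld imgpkg yq
#   yq_version="$(yq --version | grep -oE '[0-9]+\.[0-9]+\.[0-9]+' | head -n 1)"
#   version_ge "$yq_version" 4.9.2 || echo "yq is older than 4.9.2"
```

An empty result from `missing_tools` means every listed command was found; it says nothing about whether the Docker daemon is running or the Tanzu CLI plugins are installed.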

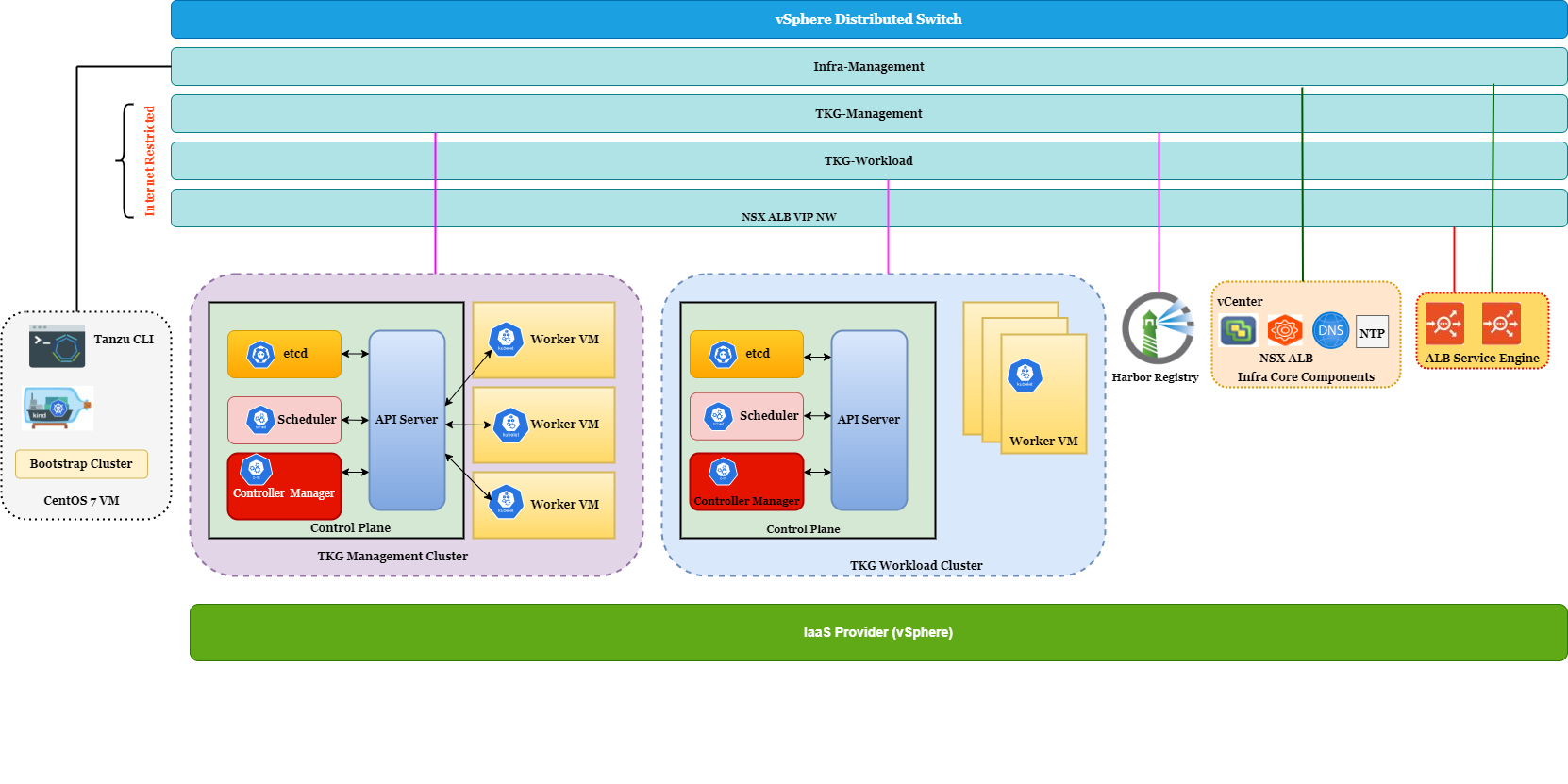

Network Layout

The diagram shows how the components are connected to each other in my lab.

The networks used for the TKG (management and workload cluster) deployment are not connected to the internet. All binaries required for the TKG deployment are pushed into the internal Harbor registry, and during deployment you point your cluster configuration at the internal registry. I placed the Harbor registry on the TKG management network to keep the architecture simple.

The required firewall rules for communication are created on the infrastructure’s firewall device, which is beyond the scope of this article.

It’s time to dive into the lab and see things in action.

The steps below show the procedure for deploying TKG 1.4 in an internet-restricted environment. They assume that you have already configured your vSphere environment as per the TKG requirements.

Step 1: Deploy and Configure NSX Advanced Load Balancer

Please see this article for the steps of deploying and configuring NSX ALB for TKG

Step 2: Deploy and Configure Harbor Registry

I already have a post on this topic, so I am not repeating the steps.

Step 3: Deploy & Configure Linux Jumphost

In my lab, I have deployed a CentOS 7 VM which is acting as a Linux jumphost and installed Tanzu CLI, Carvel Tools, Docker client, etc. The steps are here

When the jumphost configuration is ready, execute the tanzu init and tanzu management-cluster create commands. When these commands are executed for the first time, they install the necessary Tanzu Kubernetes Grid configuration files in the ~/.config/tanzu/tkg folder on the jumphost.

The script that you create and run in the subsequent steps requires TKG Bill of Materials (BoM) YAML files to be present on the jumphost. The scripts in this procedure use the BoM files to identify the correct versions of the different Tanzu Kubernetes Grid component images to pull.

Step 4: If your environment has a DNS server, ensure that you have created a DNS entry for the Harbor registry.

Step 5: Set environment variables as shown below:

5a: Set the IP address or FQDN of your local registry.

# export TKG_CUSTOM_IMAGE_REPOSITORY="PRIVATE-REGISTRY"

Where PRIVATE-REGISTRY is the IP address or FQDN of your private registry and the name of the project. For example, registry.example.com/library

5b: Set the repository from which to fetch Bill of Materials (BoM) YAML files.

# export TKG_IMAGE_REPO="projects.registry.vmware.com/tkg"

5c: If your private registry uses a self-signed certificate, provide the registry's CA certificate in base64-encoded format, e.g. the output of base64 -w 0 your-ca.crt

# export TKG_CUSTOM_IMAGE_REPOSITORY_CA_CERTIFICATE=LS0t[…]tLS0tLQ==

This CA certificate is automatically injected into all Tanzu Kubernetes clusters that you create in this Tanzu Kubernetes Grid instance.
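Steps 5a to 5c can be collected into one file that you source before generating the publish-images script. This is a sketch under assumptions: `REGISTRY_PROJECT`, `CA_FILE` and the helper `encode_ca` are hypothetical names introduced here, the default values are the placeholders used in the steps above, and `base64 -w 0` assumes GNU coreutils as in step 5c.

```shell
# Placeholder defaults; override them in the environment before sourcing this file.
REGISTRY_PROJECT="${REGISTRY_PROJECT:-registry.example.com/library}"
CA_FILE="${CA_FILE:-your-ca.crt}"

# 5a: private registry (IP or FQDN) plus project name.
export TKG_CUSTOM_IMAGE_REPOSITORY="$REGISTRY_PROJECT"

# 5b: repository from which the BoM YAML files are fetched.
export TKG_IMAGE_REPO="projects.registry.vmware.com/tkg"

# encode_ca FILE: prints the file as a single line of base64.
encode_ca() {
  base64 -w 0 "$1"
}

# 5c: only set the CA variable when the certificate file is actually present.
if [ -f "$CA_FILE" ]; then
  TKG_CUSTOM_IMAGE_REPOSITORY_CA_CERTIFICATE="$(encode_ca "$CA_FILE")"
  export TKG_CUSTOM_IMAGE_REPOSITORY_CA_CERTIFICATE
else
  echo "CA file $CA_FILE not found; TKG_CUSTOM_IMAGE_REPOSITORY_CA_CERTIFICATE not set" >&2
fi
```

Source the file (for example `. ./tkg-env.sh`, a hypothetical file name) rather than executing it, so that the exported variables stay in the shell you run the later steps from.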

Step 6: Generate the publish-images Script

Step 7: Run the publish-images Script

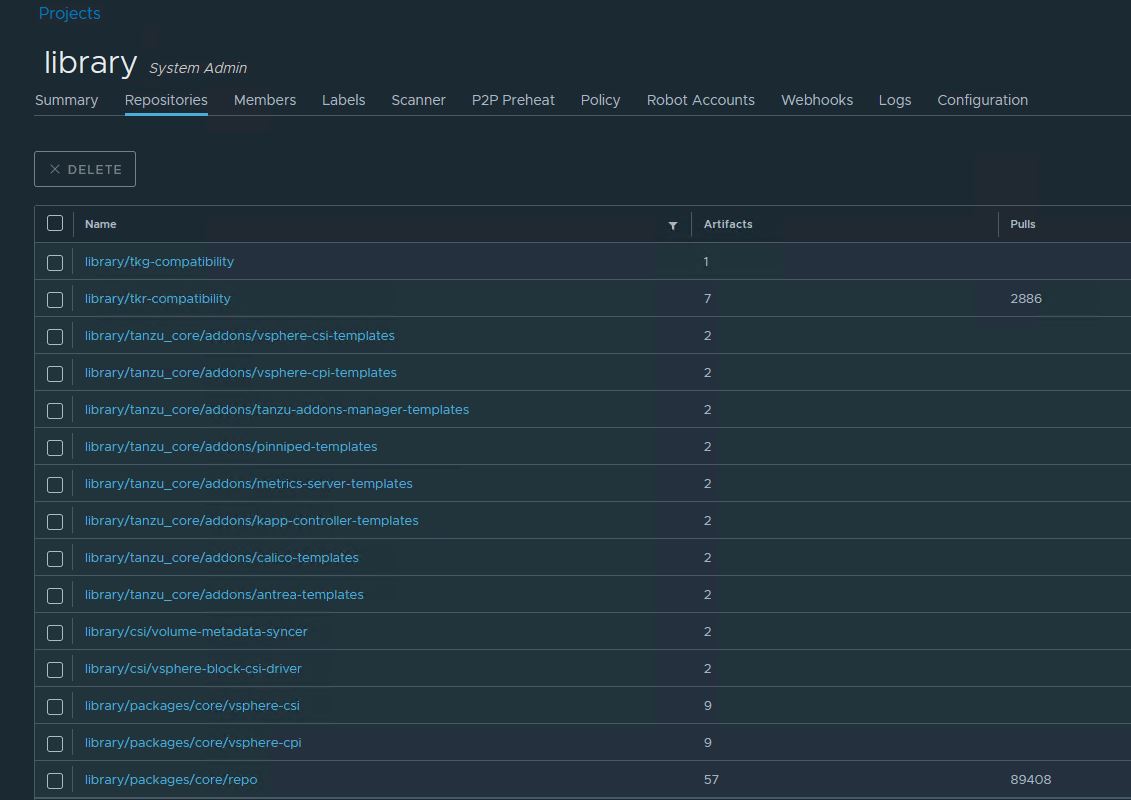

When the script finishes, verify that the Harbor registry contains the TKG installation binaries.
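Besides browsing the project in the Harbor UI, the check can be done from the command line. The sketch below assumes a Harbor 2.x registry that exposes the v2.0 REST API and a project that is readable without authentication (add `--user` to the curl call otherwise). The function names and the example CA path are hypothetical.

```shell
# harbor_repos_url REGISTRY PROJECT: prints the Harbor v2.0 API URL that lists
# the repositories of a project (page_size=100 is assumed to be accepted).
harbor_repos_url() {
  printf 'https://%s/api/v2.0/projects/%s/repositories?page_size=100\n' "$1" "$2"
}

# list_harbor_repos REGISTRY PROJECT CA_FILE: queries Harbor and prints the raw
# JSON response, trusting only the given CA certificate.
list_harbor_repos() {
  curl --silent --show-error --fail --cacert "$3" "$(harbor_repos_url "$1" "$2")"
}

# Example with the lab registry (not run automatically; CA path is an assumption):
#   list_harbor_repos registry.vstellar.local library \
#     /etc/docker/certs.d/registry.vstellar.local/ca.crt
```

A response that lists repositories such as the cert-manager images suggests the push worked; an empty JSON array means nothing was published to that project.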

Turn off the internet connection on the Linux jumphost once you've confirmed that the TKG installation binaries are available in Harbor.

Step 8: Deploy TKG Management Cluster

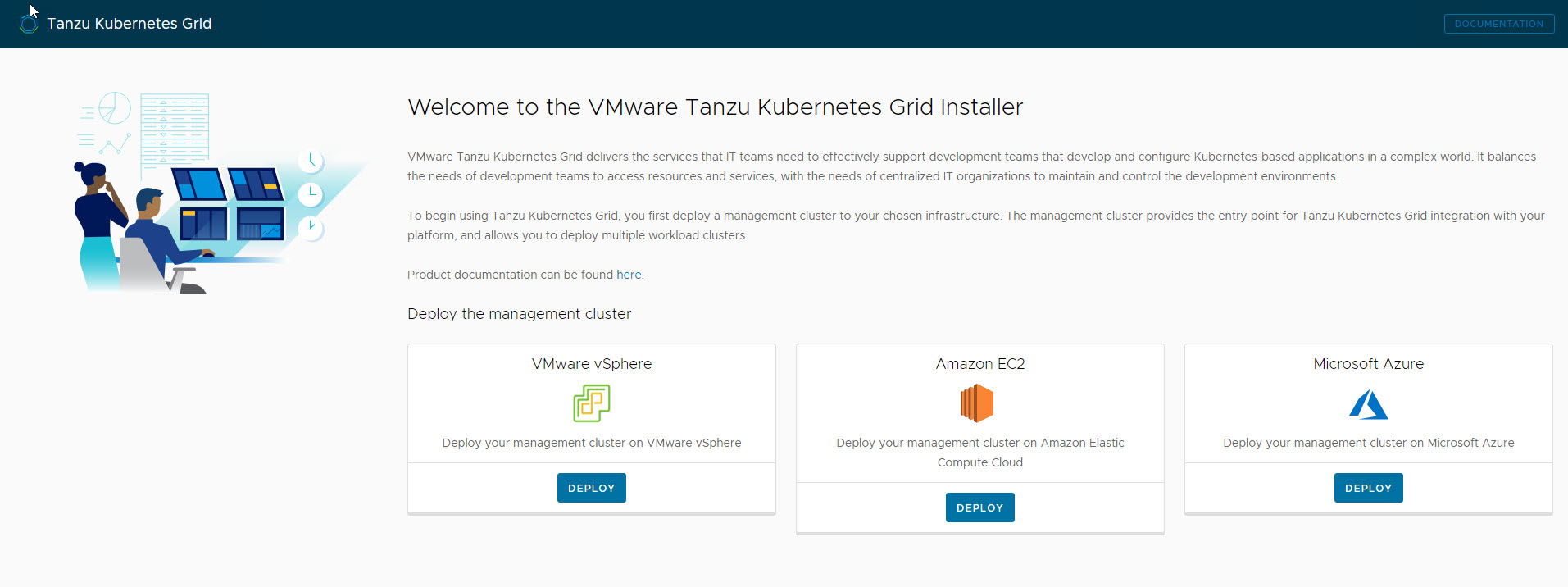

To deploy the TKG Management cluster, start the installer interface by running the below command:

    # tanzu management-cluster create --ui --bind <bootstrapper IP:Port> --browser none

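Because --browser none is set, the installer does not open a browser by itself. Open http://<bootstrapper IP:Port> in a browser; the installer interface then takes you through the steps to configure the management cluster.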

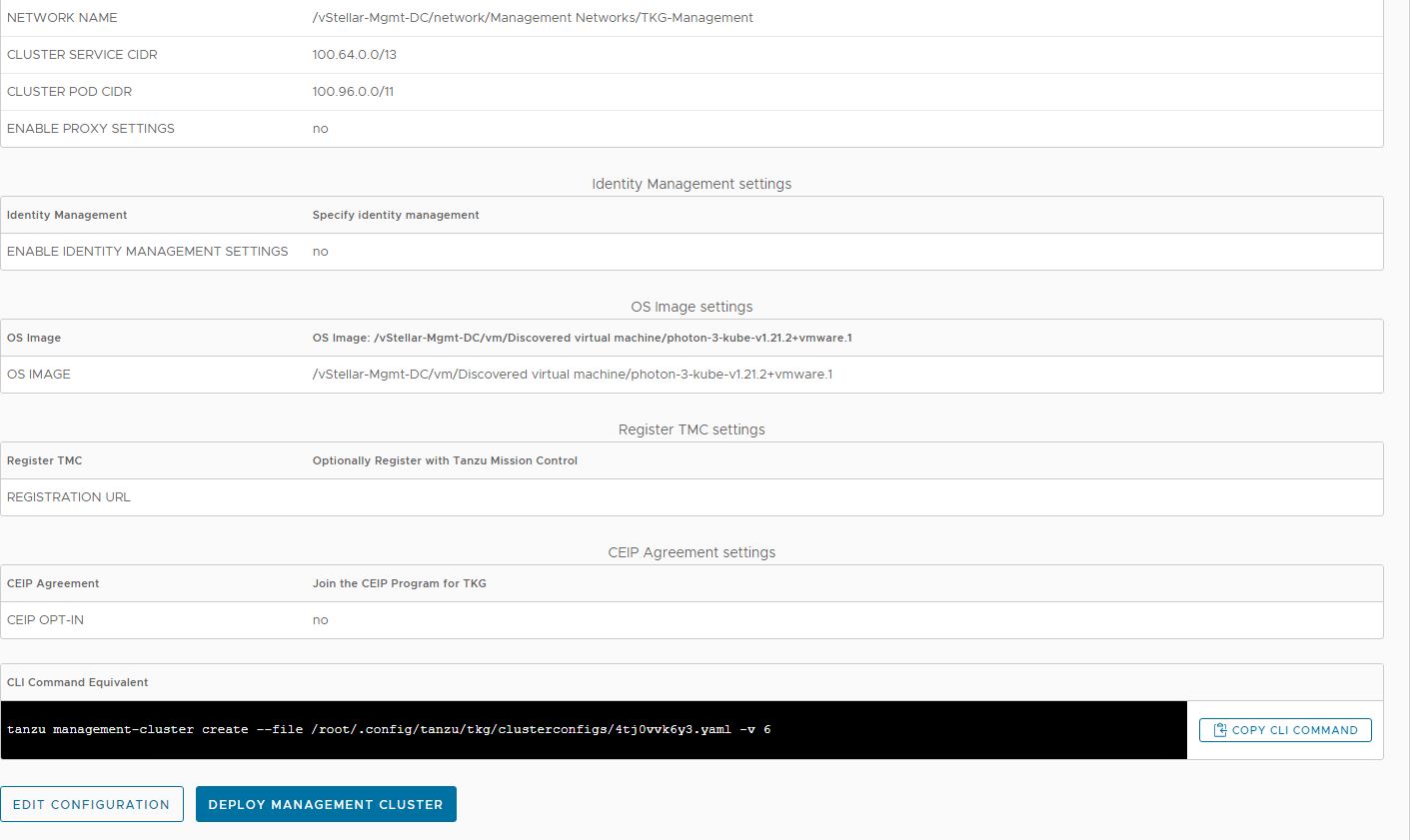

Once you’ve entered all of the necessary information and reached the Review Configuration screen, don’t click the Deploy Management Cluster button; instead, make a note of the location of the cluster config yaml file.

Append the entries for your private registry to the cluster configuration yaml file. An example yaml file is shown below for reference.

    AVI_CA_DATA_B64: LS0tLS1CRUdJTiBDjlVSVElGSUNBVEUtLS0tLQo=
    AVI_CLOUD_NAME: tkgvsphere-cloud01
    AVI_CONTROLLER: 172.16.80.10
    AVI_DATA_NETWORK: SS-VIP-NW
    AVI_DATA_NETWORK_CIDR: 172.16.86.0/24
    AVI_ENABLE: 'true'
    AVI_LABELS: |
        'type': 'management'
    AVI_PASSWORD: <encoded:Vk13YXJlMSE=>
    AVI_SERVICE_ENGINE_GROUP: tkgvsphere-tkgmgmt-group01
    AVI_USERNAME: admin
    CLUSTER_CIDR: 100.96.0.0/11
    CLUSTER_NAME: tkg14-mgmt-mj
    CLUSTER_PLAN: prod
    ENABLE_CEIP_PARTICIPATION: 'true'
    ENABLE_MHC: 'true'
    IDENTITY_MANAGEMENT_TYPE: none
    INFRASTRUCTURE_PROVIDER: vsphere
    SERVICE_CIDR: 100.64.0.0/13
    TKG_HTTP_PROXY_ENABLED: false
    DEPLOY_TKG_ON_VSPHERE7: 'true'
    VSPHERE_DATACENTER: /vStellar-Mgmt-DC
    VSPHERE_DATASTORE: /vStellar-Mgmt-DC/datastore/Mgmt-vSAN
    VSPHERE_FOLDER: /vStellar-Mgmt-DC/vm/tkg-vsphere-tkg-mgmt
    VSPHERE_NETWORK: TKG-Management
    VSPHERE_PASSWORD: <encoded:Vk13YXJlMSE=>
    VSPHERE_RESOURCE_POOL: /vStellar-Mgmt-DC/host/Mgmt-CL01/Resources/tkg-vsphere-tkg-Mgmt
    VSPHERE_SERVER: mgmt-vc01.vstellar.local
    VSPHERE_SSH_AUTHORIZED_KEY: ssh-rsa AAAAB3NzaC1yc2EAAAADAQZKPCNLO2zihRBcSw== administrator@vsphere.local
    VSPHERE_USERNAME: administrator@vsphere.local
    CONTROLPLANE_SIZE: medium
    WORKER_SIZE: medium
    VSPHERE_INSECURE: 'true'
    AVI_CONTROL_PLANE_HA_PROVIDER: 'true'
    ENABLE_AUDIT_LOGGING: 'true'
    OS_ARCH: amd64
    OS_NAME: photon
    OS_VERSION: '3'
    AVI_MANAGEMENT_CLUSTER_VIP_NETWORK_NAME: TKG-Cluster-VIP
    AVI_MANAGEMENT_CLUSTER_VIP_NETWORK_CIDR: 172.16.83.0/24
    TKG_CUSTOM_IMAGE_REPOSITORY: registry.vstellar.local/library
    TKG_CUSTOM_IMAGE_REPOSITORY_SKIP_TLS_VERIFY: 'False'
    TKG_CUSTOM_IMAGE_REPOSITORY_CA_CERTIFICATE: LS0tLS1CRUdJTiBDRRJRklDQVRFLS0tLS0K
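Appending the three private-registry entries to the generated file can also be scripted. This is a minimal sketch: `append_registry_settings` is a hypothetical helper, and the path to the config file is whatever the Review Configuration screen reported in your environment.

```shell
# append_registry_settings CONFIG REPO CA_B64: appends the private-registry keys
# to a cluster config file, unless the repository key is already present.
append_registry_settings() {
  local config="$1"
  local repo="$2"
  local ca_b64="$3"

  if grep -q '^TKG_CUSTOM_IMAGE_REPOSITORY:' "$config"; then
    echo "registry settings already present in $config" >&2
    return 0
  fi

  # Make sure the file ends with a newline before appending.
  [ -n "$(tail -c 1 "$config")" ] && echo >> "$config"

  cat >> "$config" <<EOF
TKG_CUSTOM_IMAGE_REPOSITORY: $repo
TKG_CUSTOM_IMAGE_REPOSITORY_SKIP_TLS_VERIFY: 'False'
TKG_CUSTOM_IMAGE_REPOSITORY_CA_CERTIFICATE: $ca_b64
EOF
}

# Example (not run automatically; <cluster-config-file> is your own path):
#   append_registry_settings <cluster-config-file> registry.vstellar.local/library \
#     "$(base64 -w 0 your-ca.crt)"
```

The guard on the existing key keeps the function safe to run twice, which matters because duplicate keys in the YAML file would make the effective value ambiguous.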

To deploy the management cluster, execute command: tanzu management-cluster create -f <cluster-config-file> -v 6

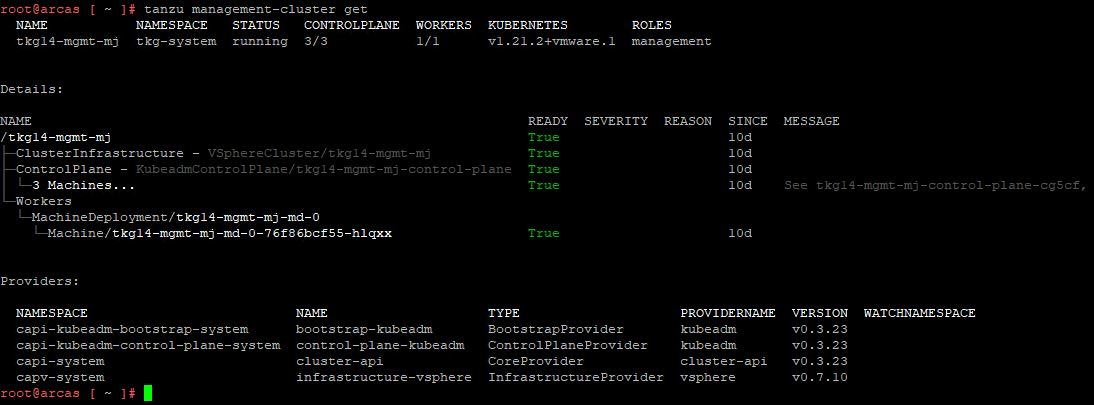

Once the management cluster is deployed, verify the health of the cluster by running the command: tanzu management-cluster get

Issues and Troubleshooting

1: The jumphost is unable to push the TKG binaries to Harbor because of the self-signed certificate.

On the jumphost VM, navigate to the /etc/docker directory and execute the command: mkdir -p certs.d/<harbor-ip or fqdn>. In my lab, I've set up Harbor to use an FQDN, so the directory structure looks like this.

    [root@tkg-jump etc]# tree docker/
    docker/
    ├── certs.d
    │   └── registry.vstellar.local
    │       └── ca.crt
    └── key.json

Copy the <cert>.crt file from the Harbor registry into the directory you just created, then restart the Docker service.
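The directory creation and certificate copy described above can be wrapped in one function. This is a sketch under assumptions: `install_registry_ca` is a hypothetical helper, the optional third argument exists only so the function can be tried against a scratch directory, and `systemctl` assumes a systemd-based host such as the CentOS 7 jumphost.

```shell
# install_registry_ca REGISTRY CA_FILE [DOCKER_ETC]: copies a CA certificate to
# DOCKER_ETC/certs.d/REGISTRY/ca.crt. DOCKER_ETC defaults to /etc/docker.
install_registry_ca() {
  local target_dir="${3:-/etc/docker}/certs.d/$1"
  mkdir -p "$target_dir" && cp "$2" "$target_dir/ca.crt"
}

# Example for the lab registry (run as root; the source file name is hypothetical):
#   install_registry_ca registry.vstellar.local ./harbor-ca.crt
#   systemctl restart docker
```

The directory name has to match the registry address exactly as it appears in image references, including a port if you use one.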

2: cert-manager or another pod is stuck in the ImagePullBackOff state, and the pod events show errors similar to the following:

    Type     Reason     Age                   From               Message
    ----     ------     ----                  ----               -------
    Normal   Scheduled  18m                   default-scheduler  Successfully assigned cert-manager/cert-manager-595b5c4f75-b2gxl to tkg-kind-c6sbhhiinqel5l99rhq0-control-plane
    Normal   Pulling    14m (x4 over 18m)     kubelet            Pulling image "registry.vstellar.local/library/cert-manager-controller:v1.1.0_vmware.1"
    Warning  Failed     13m (x4 over 17m)     kubelet            Failed to pull image "registry.vstellar.local/library/cert-manager-controller:v1.1.0_vmware.1": rpc error: code = Unknown desc = failed to pull and unpack image "registry.vstellar.local/library/cert-manager-controller:v1.1.0_vmware.1": failed to resolve reference "registry.vstellar.local/library/cert-manager-controller:v1.1.0_vmware.1": failed to do request: Head https://registry.vstellar.local/v2/library/cert-manager-controller/manifests/v1.1.0_vmware.1: dial tcp: lookup registry.vstellar.local: no such host
    Warning  Failed     13m (x4 over 17m)     kubelet            Error: ErrImagePull
    Warning  Failed     13m (x6 over 17m)     kubelet            Error: ImagePullBackOff
    Normal   BackOff    2m55s (x48 over 17m)  kubelet            Back-off pulling image "registry.vstellar.local/library/cert-manager-controller:v1.1.0_vmware.1"
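The decisive part of these events is the trailing "no such host", which points at name resolution rather than at the certificate. As a rough sketch, the filter below sorts pod events into those two causes; `pull_failure_cause` is a hypothetical helper, and the `x509:` pattern assumes the usual Go TLS error text for an untrusted certificate.

```shell
# pull_failure_cause: reads pod events (for example `kubectl describe pod` output)
# on stdin and prints a coarse cause: dns, certificate, or unknown.
pull_failure_cause() {
  local events
  events="$(cat)"
  case "$events" in
    *"no such host"*) echo "dns" ;;
    *"x509:"*)        echo "certificate" ;;
    *)                echo "unknown" ;;
  esac
}

# Example (not run automatically):
#   kubectl -n cert-manager describe pod <pod-name> | pull_failure_cause
```

A result of `dns` matches the situation in this section, where the node cannot resolve the registry FQDN.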

The "no such host" message means the node cannot resolve the registry FQDN. Create a vsphere-overlay.yaml file that adds the Harbor IP and FQDN to the /etc/hosts file of the control plane and worker nodes of the TKG clusters. Save the YAML file in the directory ~/.config/tanzu/tkg/providers/infrastructure-vsphere/ytt.

A sample yaml is shown below for reference.

    #@ load("@ytt:overlay", "overlay")

    #@overlay/match by=overlay.subset({"kind":"KubeadmControlPlane"})
    ---
    apiVersion: controlplane.cluster.x-k8s.io/v1alpha3
    kind: KubeadmControlPlane
    spec:
      kubeadmConfigSpec:
        preKubeadmCommands:
        #! Add nameserver to all k8s nodes
        #@overlay/append
        - printf "\n172.16.81.30 registry.vstellar.local" >> /etc/hosts

    #@overlay/match by=overlay.subset({"kind":"KubeadmConfigTemplate"})
    ---
    apiVersion: bootstrap.cluster.x-k8s.io/v1alpha3
    kind: KubeadmConfigTemplate
    spec:
      template:
        spec:
          preKubeadmCommands:
          #! Add nameserver to all k8s nodes
          #@overlay/append
          - printf "\n172.16.81.30 registry.vstellar.local" >> /etc/hosts

Redeploy the TKG cluster and it should go through.
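The printf command in the overlay appends to /etc/hosts unconditionally, which is fine on a freshly provisioned node. If you ever apply the same entry by hand, for example on the jumphost or on a node you are debugging, a guarded variant avoids duplicate lines. This is a sketch only: `add_hosts_entry` is a hypothetical helper and is not part of the overlay.

```shell
# add_hosts_entry IP NAME [HOSTS_FILE]: appends "IP NAME" to the hosts file
# unless a non-comment line already lists NAME. HOSTS_FILE defaults to /etc/hosts.
add_hosts_entry() {
  local hosts_file="${3:-/etc/hosts}"

  # Exact match on any hostname field (field 2 onwards) of a non-comment line.
  if awk -v name="$2" '
       $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
       END { exit !found }
     ' "$hosts_file"; then
    return 0
  fi

  printf '\n%s %s\n' "$1" "$2" >> "$hosts_file"
}

# Example with the lab values (run as root, not run automatically):
#   add_hosts_entry 172.16.81.30 registry.vstellar.local
```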

That's it for this post. I hope you enjoyed reading it. Feel free to share it on social media if you found it useful.