This blog post explains how to resize (horizontally scale) a CSE-provisioned TKGm cluster in VCD.

In my lab, I deployed a TKGm cluster with one control plane and one worker node.

    [root@cse ~]# kubectl get nodes
    NAME        STATUS   ROLES                  AGE   VERSION
    mstr-4fxl   Ready    control-plane,master   21h   v1.21.2+vmware.1
    node-cstl   Ready    <none>                 21h   v1.21.2+vmware.1

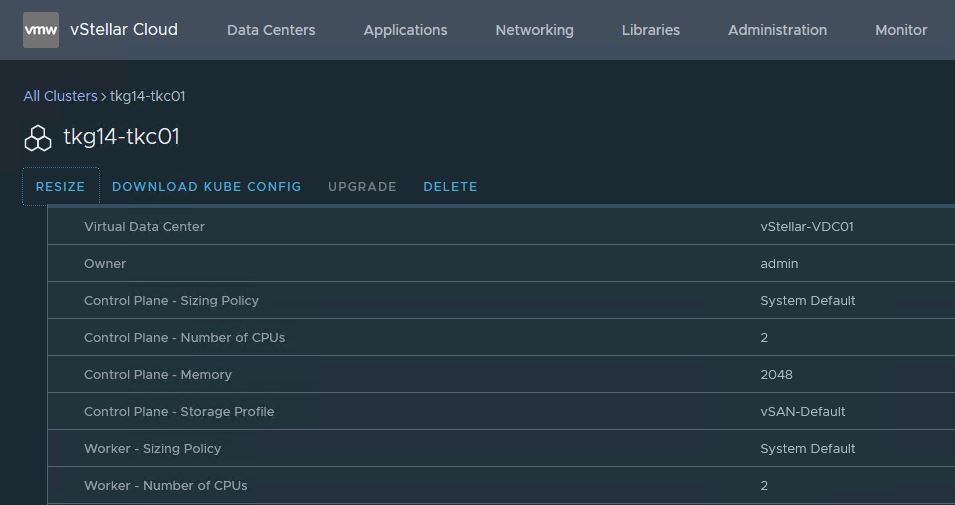

To resize the cluster through the VCD UI, go to the Kubernetes Container Clusters page and select the TKGm cluster to resize. Click on the Resize option.

Select the number of worker nodes you want in your TKGm cluster and click the Resize button.

And boom... the operation failed immediately with an error, as shown in the screenshot below.

I began my investigation by inspecting the cse-server-debug.log file, and to my astonishment, there were no log entries in the file. That’s strange.

On further investigation, I came across a blog post by Hugo Phan, a Staff Solutions Architect on our team. Hugo had run into the same issue.

The reason for this failure is that the “vcd cse cluster resize” command is not enabled when your CSE server is running with “legacy_mode: false”.

How do I fix this issue?

The steps below show the procedure for fixing the cluster resize issue.

Step 1: Log in to VCD as the tenant org admin using vcd-cli.

    [root@cse ~]# vcd login vcd.manish.lab vStellar mjha -i -w -p VMware1!
    mjha logged in, org: 'vStellar', vdc: 'vStellar-VDC01'

Step 2: List provisioned TKGm clusters.

    [root@cse ~]# vcd cse cluster list
    Name         Org       Owner    VDC             K8s Runtime    K8s Version            Status
    -----------  --------  -------  --------------  -------------  ---------------------  ----------------
    tkg14-tkc01  vStellar  admin    vStellar-VDC01  TKGm           TKGm v1.21.2+vmware.1  CREATE:SUCCEEDED

Step 3: Obtain the information about the cluster that you want to resize.

    # vcd cse cluster info <cluster-name>

The command returns the cluster info in YAML format.

Step 4: Prepare an updated cluster config file.

Copy the output of the vcd cse cluster info command and paste it into your preferred editor. Remove the entire section that begins with the “status” key.

Change the value of workers: count to the number of worker nodes you want, and save the YAML file. A sample YAML file is shown below for reference.

    apiVersion: cse.vmware.com/v2.0
    kind: TKGm
    metadata:
      name: tkg14-tkc01
      orgName: vStellar
      site: https://vcd.manish.lab
      virtualDataCenterName: vStellar-VDC01
    spec:
      distribution:
        templateName: ubuntu-2004-kube-v1.21.2+vmware.1-tkg.1-7832907791984498322
        templateRevision: 1
      settings:
        network:
          cni: null
          expose: true
          pods:
            cidrBlocks:
            - 100.96.0.0/11
          services:
            cidrBlocks:
            - 100.64.0.0/13
        ovdcNetwork: App-NW
        rollbackOnFailure: true
        sshKey: ssh-rsa AAAAB3NzaC1yc2EAA== manishj@vmware.com
      topology:
        controlPlane:
          count: 1
          cpu: null
          memory: null
          sizingClass: System Default
          storageProfile: vSAN-Default
        nfs:
          count: 0
          sizingClass: null
          storageProfile: null
        workers:
          count: 3
          cpu: null
          memory: null
          sizingClass: System Default
          storageProfile: vSAN-Default
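If you would rather not edit the file by hand, the two changes from Step 4 (dropping the status section and changing the worker count) can be scripted. The following is only a rough sketch: `prepare_resize_spec` is a helper name I made up, it is not part of vcd-cli, and it assumes that `status` is a top-level key and that `count` sits directly under `workers`, as in the sample YAML.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of vcd-cli): reads cluster info YAML on stdin,
# drops the top-level "status" section, and sets workers.count to $1.
prepare_resize_spec() {
  awk -v want="$1" '
    {
      line = $0
      stripped = line
      sub(/^ +/, "", stripped)                  # text without leading spaces
      indent = length(line) - length(stripped)  # number of leading spaces
    }
    # A non-empty line at column 0 starts a new top-level section.
    indent == 0 && stripped != "" { in_status = (stripped ~ /^status:/) }
    in_status { next }                          # skip everything under status
    # Leave the workers block once indentation returns to its level or above.
    in_workers && stripped != "" && indent <= workers_indent { in_workers = 0 }
    stripped ~ /^workers:/ { in_workers = 1; workers_indent = indent }
    # Rewrite only the count that belongs to the workers block.
    in_workers && stripped ~ /^count:/ { line = substr(line, 1, indent) "count: " want }
    { print line }
  '
}

# Example usage (assumes the info command prints plain YAML to stdout):
# vcd cse cluster info tkg14-tkc01 | prepare_resize_spec 3 > scale-tkg14-tkc01.yaml
```

Review the generated file before applying it. The control plane and NFS counts are left untouched because the rewrite is limited to lines nested under `workers`.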

Step 5: Resize TKGm Cluster

Apply the updated cluster config file to initiate cluster resize.

    [root@cse ~]# vcd cse cluster apply scale-tkg14-tkc01.yaml
    Run the following command to track the status of the cluster:
    vcd task wait 3af27ac0-003c-4f6e-9603-b91978aff914

CSE initiates the deployment of additional worker nodes. You can track the status under the Monitor > Tasks page.
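If you prefer to stay in the terminal, the task ID printed by the apply command can be passed to `vcd task wait`. Here is a minimal sketch of that idea. `extract_task_id` is a hypothetical helper of mine, and it assumes the apply output keeps the "vcd task wait <id>" wording shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads the text printed by `vcd cse cluster apply` on
# stdin and prints the first 36-character task ID that follows "vcd task wait".
extract_task_id() {
  sed -n 's/.*vcd task wait \([0-9a-fA-F-]\{36\}\).*/\1/p' | head -n 1
}

# Example usage (commented out because it needs a live VCD session):
# task_id=$(vcd cse cluster apply scale-tkg14-tkc01.yaml | extract_task_id)
# [ -n "$task_id" ] && vcd task wait "$task_id"
```

If the output format differs in your CSE version, the helper prints nothing and the wait is skipped, so you can fall back to the Tasks page in the UI.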

Step 6: Validate Cluster Resize

Run the kubectl get nodes command to validate that the additional worker nodes have been deployed, configured, and joined to the Kubernetes cluster.

    [root@cse ~]# kubectl get nodes
    NAME        STATUS   ROLES                  AGE     VERSION
    mstr-4fxl   Ready    control-plane,master   21h     v1.21.2+vmware.1
    node-40ia   Ready    <none>                 4m45s   v1.21.2+vmware.1
    node-cstl   Ready    <none>                 21h     v1.21.2+vmware.1
    node-lrhz   Ready    <none>                 2m17s   v1.21.2+vmware.1
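The same check can be scripted by counting the worker nodes that report Ready. This is just a sketch under a couple of assumptions: `count_ready_workers` is a name I picked, and it treats any node whose ROLES column shows `<none>` as a worker, which matches the output in my lab.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `kubectl get nodes` output on stdin and counts
# nodes whose STATUS is exactly "Ready" and whose ROLES column is "<none>".
count_ready_workers() {
  awk '$2 == "Ready" && $3 == "<none>" { n++ } END { print n + 0 }'
}

# Example usage (assumes kubectl already points at the TKGm cluster):
# kubectl get nodes --no-headers | count_ready_workers
```

Compare the printed number with the workers count you set in the YAML file. The header line and nodes in states such as NotReady are ignored.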

Important Note: CSE 3.1.1 only supports scaling up a TKGm cluster; scale-down is not yet supported.

I hope you enjoyed reading this post. Feel free to share this on social media if it is worth sharing.